AssertJ Core: Quality & Evolution

In our previous essay, we focused on the architecture of AssertJ Core and examined how architectural decisions influence the quality attributes. We begin this essay by summarising the degree to which these attributes are currently satisfied, and then we focus on the different means through which the quality of AssertJ Core is safeguarded. This includes quality assurance steps taken, means of automating these steps with CI, as well as an analysis of hotspot components and technical debt.

The table below shows seven quality attributes (defined in the ISO 25010 standard [1]) that are relevant to AssertJ Core and their realisation within the system.

Software Quality Processes

The software development processes used in a project have an impact on its software quality [2]. The software quality (assurance) process spans the entire development process and covers requirement analysis (creation of issues) as well as the testing of these requirements [3]. Since AssertJ Core is an open-source project, this process needs to be communicated to all contributors. This is done through the CONTRIBUTING.md file. Eight of its thirteen rules refer to testing, ranging from naming conventions to the structure of the tests.

Besides testing, there is an emphasis on documentation. Each assertion is required to have Javadoc containing both passing and failing examples, and these examples can also be added to the separate examples repository [4]. This documentation not only clarifies the code but also gives users of AssertJ Core the guidance they need. Finally, code formatting is specified with a custom Eclipse Formatter profile, and static imports are required wherever they make the code more readable.

Next to the rules for the code itself, there are also rules that define proper Git usage and change integration. Additionally, there are templates for issues and PRs that contain checklists to verify that the contributor did not forget any standard steps.

Quality Culture

Borrowing a definition from Software Quality Assurance [5], “quality culture is the culture that guides the behaviours, activities, priorities, and decisions of an individual as well as of an organisation”. To assess the quality culture of AssertJ Core, we browsed the repository and examined the discussions on issues and PRs.

At the time of writing, there were 110 open issues and 35 open PRs. The development of AssertJ Core is very active, with 1054 closed issues and 1297 closed PRs. The analysed issues and PRs can be found in the table below.

| Issue | Issue status | PR | PR status |
|---|---|---|---|
| #1195 | Closed | #255 | Accepted |
| #1504 | Closed | #1093 | Accepted |
| #1418 | Closed | #1460 | Accepted |
| #1002 | Closed | #1486 | Open |
| #148 | Closed | #1498 | Accepted |
| #971 | Open | #1754 | Open |
| #1621 | Open | #1871 | Open |
| #1104 | Open | #2019 | Accepted |
| #3 | Open | #2086 | Draft |
| #2060 | Open | #2171 | Draft |

One important part of the quality culture is the way the repository is set up. There are 28 labels, which are used for both issues and PRs. Perhaps the most interesting ones are good first issue (easy issues that can be picked up by developers not familiar with the AssertJ Core architecture), for: team-attention (frequently used when the maintainers need to reach a decision together), and Investigation needed.

The maintainers respond swiftly (#2515) and often cooperate closely (#2171). Code style is taken into account during code reviews (#1871). While some PRs have been open for a long time (#1486), these contain big changes and do not reflect the usual workflow of AssertJ Core. Looking at the repository, one gets the sense that the maintainers carefully consider the quality of the software and consistently encourage the same approach in all contributors.

Test Processes & Test Coverage

AssertJ Core has established a set of strict guidelines for writing tests. These guidelines can be loosely clustered into three levels: code, test, and formatting.

On the code level, the guidelines suggest using package-private visibility for test classes and methods. While JUnit 5 allows test classes and methods to have any visibility except private, the default package visibility is preferred as it improves code readability [6].

On the test level, the guidelines suggest writing a test class for each assertion method. They encourage testers to use existing assertions from AssertJ Core and require separate tests for a succeeding and a failing assertion.

On the formatting level, the guidelines contain strict rules for naming conventions. There is a preference for the underscore-based style (snake case). This can improve readability: one study reports that snake case identifiers were read 13.5% faster than camel case ones [7].
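The three levels can be illustrated with a minimal, framework-free sketch. `BlankCheck` and its `assertNotBlank` method are hypothetical stand-ins for an assertion under test, and the `main` method only replaces a test runner so that the example runs without dependencies. In the real project the two test methods would be JUnit 5 `@Test` methods that use AssertJ's own assertions, and the exact names are assumptions that merely follow the snake case style.

```java
// One test class per assertion method; the class and its methods are package-private.
class BlankCheck_assertNotBlank_Test {

  // Passing case, named in snake case.
  void should_pass_if_actual_is_not_blank() {
    BlankCheck.assertNotBlank("AssertJ");
  }

  // The failing case lives in its own test and also checks the error message.
  void should_fail_if_actual_is_blank() {
    try {
      BlankCheck.assertNotBlank("   ");
    } catch (AssertionError e) {
      if (!e.getMessage().contains("non-blank")) {
        throw new IllegalStateException("unexpected error message: " + e.getMessage());
      }
      return;
    }
    throw new IllegalStateException("expected an AssertionError");
  }

  // Stand-in for a test runner so the sketch runs without JUnit.
  public static void main(String[] args) {
    BlankCheck_assertNotBlank_Test test = new BlankCheck_assertNotBlank_Test();
    test.should_pass_if_actual_is_not_blank();
    test.should_fail_if_actual_is_blank();
    System.out.println("both tests passed");
  }
}

// Hypothetical assertion under test (not part of AssertJ).
final class BlankCheck {
  static void assertNotBlank(String actual) {
    if (actual == null || actual.trim().isEmpty()) {
      throw new AssertionError("Expecting a non-blank string but was: <" + actual + ">");
    }
  }
}
```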

Another indicator of AssertJ Core’s rigorous testing procedure is the test coverage. According to the SonarCloud statistics linked from the GitHub repository of AssertJ Core, the main branch reaches 92.1% line coverage and 90.0% condition coverage. Test coverage also plays a role in the Quality Gate, a SonarCloud feature that allows developers to set rules for a quality check of newly added code.

Test coverage does not guarantee that the code is properly tested, but a high percentage of line and condition coverage is a good indicator of high reliability standards. To further improve the quality of its test suite, AssertJ Core makes use of mutation testing with PIT. Whereas test coverage cannot measure the quality of the tests, mutation testing is well suited to assessing the test suite [8].
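The difference between coverage and mutation testing can be shown with a small hand-written example. Everything in it is hypothetical: the age check is not AssertJ code, and the mutant is written by hand, whereas PIT generates mutants automatically. The weak test executes the production line, so it yields full line coverage, yet it cannot tell the original from the mutant.

```java
import java.util.function.IntPredicate;

final class MutationSketch {
  // Original (hypothetical) production logic.
  static final IntPredicate ORIGINAL = age -> age >= 18;
  // Hand-written mutant: the boundary operator >= is changed to >.
  static final IntPredicate MUTANT = age -> age > 18;

  // Weak test: executes the line under test but never probes the boundary value.
  static boolean weakTestPasses(IntPredicate isAdult) {
    return isAdult.test(30) && !isAdult.test(5);
  }

  // Strong test: additionally checks the boundary value 18.
  static boolean strongTestPasses(IntPredicate isAdult) {
    return weakTestPasses(isAdult) && isAdult.test(18);
  }

  public static void main(String[] args) {
    // A mutant is "killed" when a test that passes on the original fails on the mutant.
    System.out.println("weak test kills mutant:   " + !weakTestPasses(MUTANT));   // false
    System.out.println("strong test kills mutant: " + !strongTestPasses(MUTANT)); // true
  }
}
```

A surviving mutant such as this one points at a missing boundary check in the tests, which is information that a coverage percentage alone does not provide.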

Continuous Integration

Defining rules for contributors is the first step toward achieving software quality in an open-source project. However, people make mistakes, and manually checking conformance to the rules can be a lot of work. This is why many projects nowadays use a continuous integration (CI) pipeline. Such a pipeline makes it easier to accept contributions without losing code quality [9].

AssertJ Core uses GitHub Actions [10] and has 12 checks distributed over 7 workflows. The main workflow contains the CI and runs on each push and pull request. It has two jobs, test_os and sonar, which run the following commands respectively.

```shell
./mvnw -V --no-transfer-progress -e verify javadoc:javadoc
```

```shell
./mvnw -V --no-transfer-progress -e -Pcoverage verify javadoc:javadoc sonar:sonar \
  -Dsonar.host.url=https://sonarcloud.io \
  -Dsonar.organization=assertj \
  -Dsonar.projectKey=joel-costigliola_assertj-core
```

In both cases, the correct Java version is set up first. The first command runs the tests on Ubuntu, macOS, and Windows, and the second command runs the tests while measuring the coverage. The only differences when measuring the test coverage are the added coverage profile (-Pcoverage), the sonar:sonar goal, and the flags that it requires. This allows the CI to upload the results to the project on SonarCloud, where the developers and maintainers can analyse the report. The test coverage is only measured on pushes, not when activity happens on a pull request.

Besides the test coverage, Sonar also analyses code quality in terms of, for example, duplicated lines and maintainability. In the project on SonarCloud, a few rules are defined that put constraints on the measured metrics. For example, the test coverage of new code should be at least 80% and the percentage of duplicated lines of code has to be lower than 5%. Whether these constraints are met is displayed by the Quality Gate badge in the README.

For many projects, a style check is included in the CI pipeline. While AssertJ Core defines an Eclipse Formatter profile, the CI does not enforce this formatting. Adding a style check with a similar configuration might improve the styling of the code that is created by external contributors. To that end, we opened a PR that uses Spotless in the CI.
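The two example constraints can be read as a simple predicate over the measured metrics. The sketch below only mirrors that logic: the real gate is configured in SonarCloud, the class and method names are hypothetical, and the inputs in `main` are illustrative values rather than measurements of AssertJ Core.

```java
final class QualityGateSketch {
  // Thresholds as quoted in the text, in percent.
  static final double MIN_NEW_CODE_COVERAGE = 80.0;
  static final double MAX_DUPLICATED_LINES = 5.0;

  // The gate passes only if every condition holds.
  static boolean passes(double newCodeCoveragePercent, double duplicatedLinesPercent) {
    return newCodeCoveragePercent >= MIN_NEW_CODE_COVERAGE
        && duplicatedLinesPercent < MAX_DUPLICATED_LINES;
  }

  public static void main(String[] args) {
    System.out.println(passes(85.0, 1.2)); // true: both conditions hold
    System.out.println(passes(75.0, 1.2)); // false: coverage of new code is too low
    System.out.println(passes(85.0, 5.0)); // false: duplication is not below 5%
  }
}
```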

Hotspots & Code Health

To understand the code quality of the problematic components, we looked into AssertJ Core’s code hotspots, i.e. areas of the project where changes and defects occur more often than elsewhere. These may indicate “areas of the source code that need improvement” [11] and are thus of interest with respect to code quality.

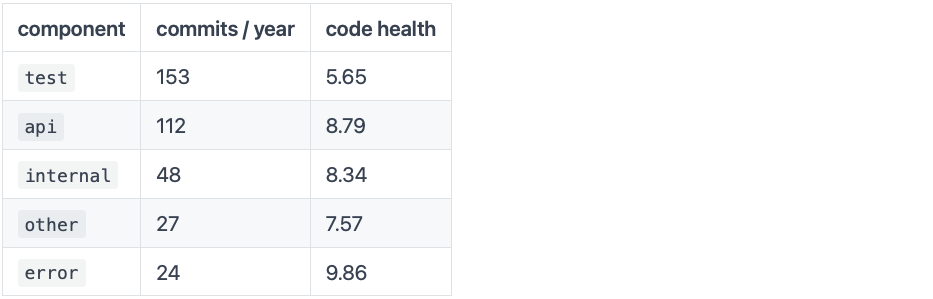

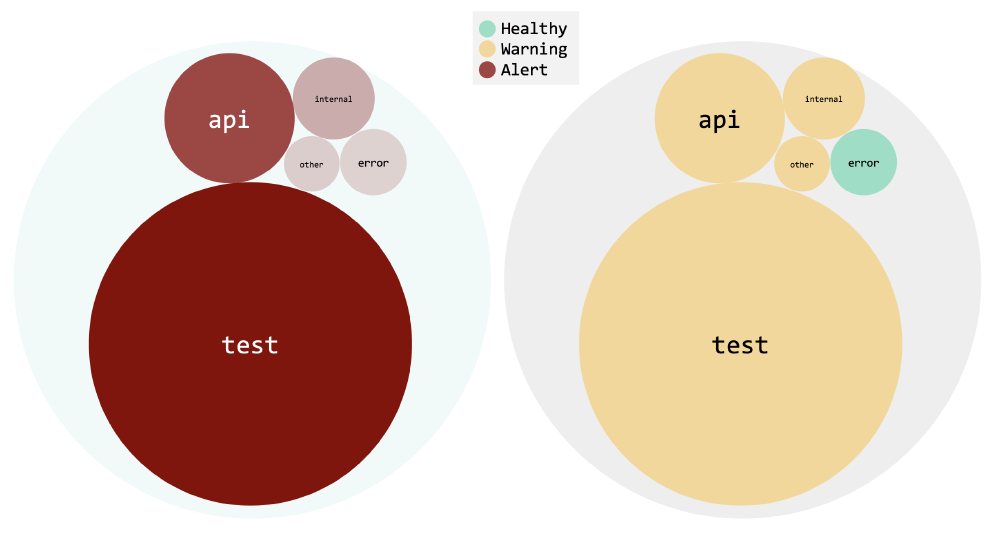

To obtain such a detailed analysis, we used a tool called CodeScene. We configured the tool to consider five architectural components: the api, internal, and error packages, all other production code packages under other, and the test suite. The generated technical health report12 is summarised in the following table and graphs where CodeScene awarded health scores between 0 (bad) and 10 (good):

Figure: The hotspots (left) and the code health (right) by component.

Test Code

The top hotspot in the codebase is the test suite. This makes sense, since one often needs to write many tests for a single implementation, so every feature causes more changes in the tests than anywhere in the production code. Test code can be full of duplication, have lower performance, and follow different code quality standards than production code [13]. Most of these are bound to trigger one of the code health factors that CodeScene checks for.

The majority of the test code files within the project have relatively good code health, with scores above 9. There is one particular spot, however, where the health is very low: the tests surrounding SoftAssertions. Here, the two main test classes have scores of 3 and 4, with CodeScene pointing to issues such as file size, large methods, large assertion blocks, and potentially low cohesion. All of these can be seen in the file itself, where a large method with about a hundred assertions dominates.

Production Code

The more relevant hotspots occur within the production code. While error and other have a reasonable number of commits per year, internal and api are more actively edited and have lower health scores. This is to be expected, because these are the packages that implement the main functionality of AssertJ Core.

The unhealthiest file is the RecursiveComparisonDifferenceCalculator from the api package, which triggers five alerts and five warnings, most referring to a single “brain” method with high complexity and duplicate function blocks. The method determines the recursive differences between objects using code with excessive cyclomatic complexity:

```java
private static List<ComparisonDifference> determineDifferences(Object actual, Object expected, FieldLocation fieldLocation,
                                                                List<DualValue> visited,
                                                                RecursiveComparisonConfiguration recursiveComparisonConfiguration) {
  ...
  while (comparisonState.hasDualValuesToCompare()) {
    ...
    // Custom comparators take precedence over all other types of comparison
    if (recursiveComparisonConfiguration.hasCustomComparator(dualValue)) {
      if (!areDualValueEqual(dualValue, recursiveComparisonConfiguration)) comparisonState.addDifference(dualValue);
      // since we used a custom comparator we don't need to inspect the nested fields any further
      continue;
    }
    if (actualFieldValue == expectedFieldValue) continue;
    ...
    // we compare ordered collections specifically as to be matching, each pair of elements at a given index must match.
    // concretely we compare: (col1[0] vs col2[0]), (col1[1] vs col2[1])...(col1[n] vs col2[n])
    if (dualValue.isExpectedFieldAnOrderedCollection()
        && !recursiveComparisonConfiguration.shouldIgnoreCollectionOrder(dualValue.fieldLocation)) {
      compareOrderedCollections(dualValue, comparisonState);
      continue;
    }
    ...
    // compare Atomic types by value manually as they are container type and we can't use introspection in java 17+
    if (dualValue.isExpectedFieldAnAtomicBoolean()) {
      compareAtomicBoolean(dualValue, comparisonState);
      continue;
    }
    if (dualValue.isExpectedFieldAnAtomicInteger()) {
      compareAtomicInteger(dualValue, comparisonState);
      continue;
    }
    if (dualValue.isExpectedFieldAnAtomicIntegerArray()) {
      compareAtomicIntegerArray(dualValue, comparisonState);
      continue;
    }
    ...
  }
  return comparisonState.getDifferences();
}
```

Another set of hotspots with low code health are the classes within internal which focus on assertions for often-used, complex data types such as Map, Iterables, or Classes. These all have many conditionals as well as complex methods with code duplication. The fact that some have to be implemented on the abstract level without specific implementation knowledge also contributes to this complexity.

Finally, there is also increased and deeply nested complexity in the StandardComparisonStrategy class within the internal package. This mostly stems from the universality of the class: it is used for the comparison of most JDK types, so it often requires if statements or other workarounds to reach the specific comparison needed. This disadvantage of abstract implementations seems to manifest within AssertJ Core in various places.
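A deliberately simplified sketch shows why a universal comparison strategy accumulates branches. `UniversalEquality` is hypothetical and far smaller than StandardComparisonStrategy; it only demonstrates that arrays cannot be compared with `equals` and therefore force one type check per array kind.

```java
import java.util.Arrays;

final class UniversalEquality {
  // Each JDK type with non-standard equality semantics adds another branch.
  static boolean areEqual(Object actual, Object other) {
    if (actual == null) return other == null;
    if (actual instanceof Object[] && other instanceof Object[]) {
      return Arrays.deepEquals((Object[]) actual, (Object[]) other);
    }
    if (actual instanceof int[] && other instanceof int[]) {
      return Arrays.equals((int[]) actual, (int[]) other);
    }
    if (actual instanceof double[] && other instanceof double[]) {
      return Arrays.equals((double[]) actual, (double[]) other);
    }
    // A complete version would need one branch per remaining primitive array type.
    return actual.equals(other);
  }

  public static void main(String[] args) {
    int[] first = {1, 2};
    int[] second = {1, 2};
    System.out.println(first.equals(second));    // false: arrays use reference equality
    System.out.println(areEqual(first, second)); // true: compared element by element
  }
}
```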

Technical Debt

Technical debt is defined by SonarCloud as the “effort to fix all code smells” [14]. It thus measures the time necessary to fix all accumulated maintainability issues. This value is of interest, as the presence of debt might make it more difficult to understand and contribute to the code without introducing more issues.

The figure below shows the technical debt analysis performed with SonarCloud:

Figure: The debt distribution of the main module. The test module follows the same distribution.

It is not surprising that the debt is concentrated in the main pillars of the software architecture, as these packages contain the most functionality and logic for AssertJ Core. However, this is also where the debt can cause the most damage, because these packages are the target of most new contributions. Such contributions may become more difficult to add as the debt accumulates and are likely to introduce more code smells.

The technical debt of AssertJ Core may seem very high in absolute terms. However, the debt ratio (the estimated cost of fixing the code smells relative to the estimated cost of developing the code) was only about 2.9%. Considering that rewriting the whole code from scratch only becomes cheaper when the ratio reaches 100%, we can safely say that AssertJ Core has accumulated very little technical debt. SonarCloud rated the debt with an ‘A’ grade, which reflects that AssertJ Core maintains a high standard of code quality in terms of technical debt.
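The relation between the ratio and the grade can be sketched as follows. The 30 minutes per line of code and the rating thresholds are assumptions based on what we understand to be the Sonar defaults, and the inputs in `main` are hypothetical numbers chosen to give a ratio of 2.9%; they are not the measured values of AssertJ Core.

```java
final class DebtRatioSketch {
  // Assumed default: minutes needed to develop one line of code.
  static final double MINUTES_PER_LINE = 30.0;

  // Debt ratio = remediation cost / estimated development cost.
  static double debtRatio(double remediationMinutes, long linesOfCode) {
    return remediationMinutes / (MINUTES_PER_LINE * linesOfCode);
  }

  // Assumed default rating grid: A up to 5%, B up to 10%, C up to 20%, D up to 50%, else E.
  static char rating(double ratio) {
    if (ratio <= 0.05) return 'A';
    if (ratio <= 0.10) return 'B';
    if (ratio <= 0.20) return 'C';
    if (ratio <= 0.50) return 'D';
    return 'E';
  }

  public static void main(String[] args) {
    // Hypothetical inputs: 8,700 minutes of debt in a code base of 10,000 lines.
    double ratio = debtRatio(8_700, 10_000);
    System.out.println("ratio = " + ratio + ", rating = " + rating(ratio));
  }
}
```

Under these assumptions, a ratio of about 2.9% sits comfortably inside the ‘A’ band, which is consistent with the grade reported above.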

References

1. ISO 25010. (n.d.). Retrieved 16 March 2022, from https://iso25000.com/index.php/en/iso-25000-standards/iso-25010
2. Ashrafi, N. (2003). The impact of software process improvement on quality: in theory and practice. Information & Management, 40(7), 677-690. https://doi.org/10.1016/s0378-7206(02)00096-4
3. Software QA Process: Stages, Setup, Specifics. Scnsoft.com. (2022). Retrieved 16 March 2022, from https://www.scnsoft.com/software-testing/qa-process
4. GitHub - assertj/assertj-examples: Examples illustrating AssertJ assertions. GitHub. (2022). Retrieved 16 March 2022, from https://github.com/assertj/assertj-examples
5. Quality Culture. (2017). Software Quality Assurance, 35-65. https://doi.org/10.1002/9781119312451.ch2
6. Java static code analysis: JUnit5 test classes and methods should have default package visibility. Rules.sonarsource.com. (2022). Retrieved 16 March 2022, from https://rules.sonarsource.com/java/RSPEC-5786
7. Binkley, D., Davis, M., Lawrie, D., & Morrell, C. (2019). To CamelCase or under_score. 2019 IEEE/ACM 27th International Conference on Program Comprehension (ICPC). https://doi.org/10.1109/icpc.2019.00035
8. PIT Mutation Testing. Pitest.org. (2022). Retrieved 16 March 2022, from https://pitest.org/
9. Vasilescu, B., Yu, Y., Wang, H., Devanbu, P., & Filkov, V. (2015). Quality and productivity outcomes relating to continuous integration in GitHub. Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering. https://doi.org/10.1145/2786805.2786850
10. Features • GitHub Actions. GitHub. (2022). Retrieved 16 March 2022, from https://github.com/features/actions
11. Snipes, W., Robinson, B., & Murphy-Hill, E. (2011). Code Hot Spot: A tool for extraction and analysis of code change history. 2011 27th IEEE International Conference on Software Maintenance (ICSM). https://doi.org/10.1109/icsm.2011.6080806
12. CodeScene technical health report for AssertJ Core. https://drive.google.com/file/d/1Da9S2w3lM5CyacSrhiIW_wA-6JF1pprM/view?usp=sharing
13. How To Deal With Test And Production Code | HackerNoon. Hackernoon.com. (2022). Retrieved 16 March 2022, from https://hackernoon.com/how-to-deal-with-test-and-production-code-c64acd9a062
14. SonarSource. (2022). Metric definitions. SonarCloud Docs. Retrieved 16 March 2022, from https://docs.sonarcloud.io/digging-deeper/metric-definitions/