Robot Framework - Evolution of Quality

Robot Framework (RF) is an open-source automation framework for acceptance testing and robotic process automation, designed to make QA personnel’s jobs easier. Since the project aims to integrate with as many external systems under test as possible, a lot of effort goes into keeping the code easy to read and extend. The architectural decisions, and the vision that shapes them, were discussed in our previous posts. In this essay, we analyze the code quality of the open-source system, its development strategies and quality standards, and the processes that make up its continuous integration effort.

Overall quality process

There is no hard set of rules that one must follow in order to contribute. Rather, there are a few guidelines 1 that ensure smooth integration of new features and enhancements to the existing code. Contributions also include improving the documentation and answering questions on the robotframework-users mailing list and other relevant forums.

The guidelines suggest creating an issue before beginning work on a bug or a new feature. If there are questions about the relevance of a prospective contribution, developers are requested to ask on the mailing list, Slack 2, or any other appropriate forum.

Creating issues enables constructive discussion of the proposed changes and prevents duplicate work. Such discussions often help cover edge cases.

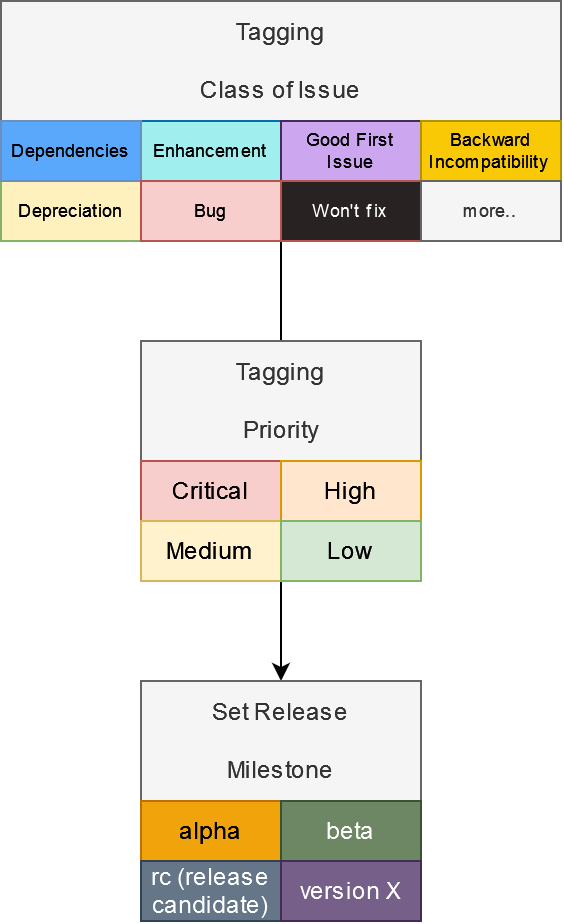

When issues are created, the emphasis is on labeling them properly, which is taken care of by the lead developers. Priority tags are attached according to the criticality of the issue. Based on functionality, issues are classified as bugs, dependencies, etc. Issues are also assigned to target milestones, and a milestone can only be reached once all of its issues are closed.

Figure: Tagging methodology

When a bug is found, a comprehensive report is expected so that the problem can be reproduced 3. For enhancements, the guidelines stress explaining the use case and properly documenting everything related to the change. To encourage new contributors, RF keeps a separate list of easy issues labeled good first issue.

The code conventions for pull requests are likewise well documented 4, and contributors are expected to follow them.

Continuous Integration Process

Continuous Integration (CI) in Robot Framework is built on a pipeline that runs after each commit pushed to a pull request and again before the pull request is merged 5. No separate CI run is executed for a release; rather, a release follows the CI run for the most recent commit made for that release.

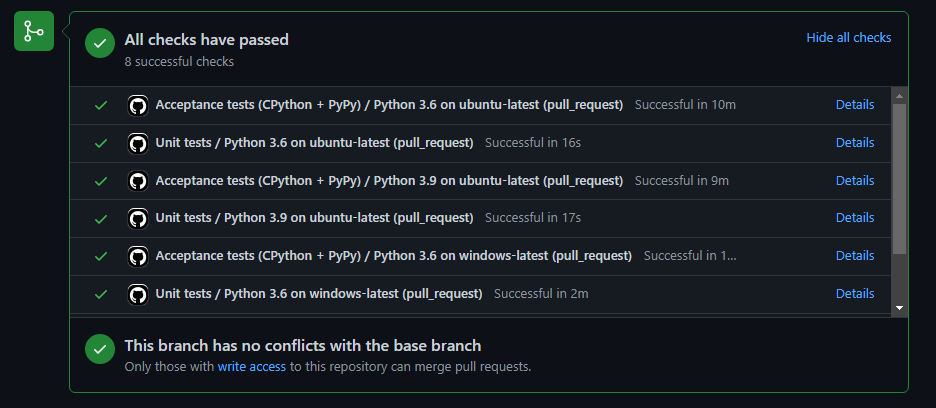

Figure: Pull Request CI Pipeline

The pipeline includes multiple checks, comprising acceptance tests as well as unit tests, which evaluate the code on different versions of Python and on different operating systems such as Ubuntu and Windows.

The acceptance and unit test pipeline runs only for code changes committed to a pull request; it is not triggered for documentation-only updates such as changes to .rst files.
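The skip rule for documentation-only changes can be pictured as a path filter. The following is a minimal Python sketch of that idea, not the project's actual workflow configuration; the `IGNORED_PATTERNS` list and the `should_run_tests` helper are hypothetical names chosen for illustration.

```python
from fnmatch import fnmatch

# Hypothetical ignore patterns; the real workflow files define their own lists.
IGNORED_PATTERNS = ["*.rst", "doc/*"]


def should_run_tests(changed_files):
    """Return True if at least one changed file is not matched by an ignore pattern."""
    return any(
        not any(fnmatch(path, pattern) for pattern in IGNORED_PATTERNS)
        for path in changed_files
    )


# A documentation-only change does not trigger the test pipeline.
print(should_run_tests(["README.rst", "doc/userguide/src/Intro.rst"]))  # False
# A change that touches source code does.
print(should_run_tests(["README.rst", "src/robot/running/model.py"]))   # True
```

The tests run as soon as a single changed file falls outside the ignored patterns, which mirrors the behavior described above.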

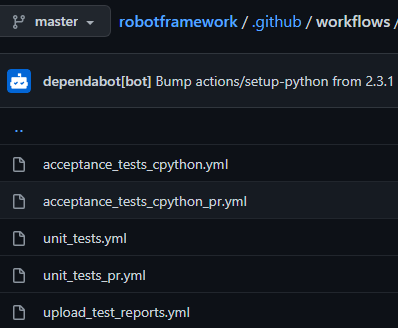

The CI pipeline is defined using GitHub workflows 6: the workflows are specified as YAML files in the repository and are triggered using Dependabot 7 whenever a new commit is pushed or a pull request is merged. There are separate YAML files for acceptance tests, for unit tests, and for publishing test execution reports to the corresponding pull request.

Figure: Dependabot YAML configuration files

As RF is a test automation framework with sporadic build releases for users, the continuous deployment pipeline is only triggered for changes to the Robot Framework documentation website 8. The documentation lists the keywords of the various RF libraries, with examples. A different pipeline is triggered to deploy to PyPI 9 whenever a new release happens.

Rigour of the testing process

Testing any piece of software tells us what it does and how well it does its job. The goal is to identify bugs, gaps, or missing requirements relative to the specification. This holds for a testing framework as well!

As stated in RF’s contribution guidelines 10, a pull request that adds a new feature or a fix must be covered by one or more acceptance tests. For internal logic, unit tests should be added wherever required.

The basic expectation is that individual tests do not break the existing code flow. The tests should run successfully on a variety of interpreters (e.g. pypy3) and environments. RF allows running the tests manually. Additionally, pull requests are tested automatically on continuous integration.

RF’s acceptance tests are written in RF itself and can be run with the run.py script. It is used as follows:

atest/run.py [--interpreter interpreter]

RF’s unit tests are implemented with Python’s unittest module 11. A separate run.py script, located with the unit tests under utest, executes all of them: it finds and runs every test case whose name starts with the prefix test_.
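To illustrate the prefix-based collection, here is a small self-contained sketch using only the standard unittest module. The `TestNormalize` class and its methods are made up for this example and are not taken from RF's test suite. By default, unittest's loader collects methods whose names start with `test`, so the helper method below is never run.

```python
import unittest


class TestNormalize(unittest.TestCase):
    """Hypothetical test case; not part of Robot Framework's utest suite."""

    def test_strips_whitespace(self):
        # Collected: the method name starts with the "test" prefix.
        self.assertEqual("  robot ".strip(), "robot")

    def helper_not_collected(self):
        # Ignored by the loader: the name lacks the "test" prefix.
        raise AssertionError("this method is never executed")


loader = unittest.TestLoader()
suite = loader.loadTestsFromTestCase(TestNormalize)
test_count = suite.countTestCases()  # counted before running the suite
result = unittest.TextTestRunner(verbosity=0).run(suite)

print(test_count, result.wasSuccessful())  # 1 True
```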

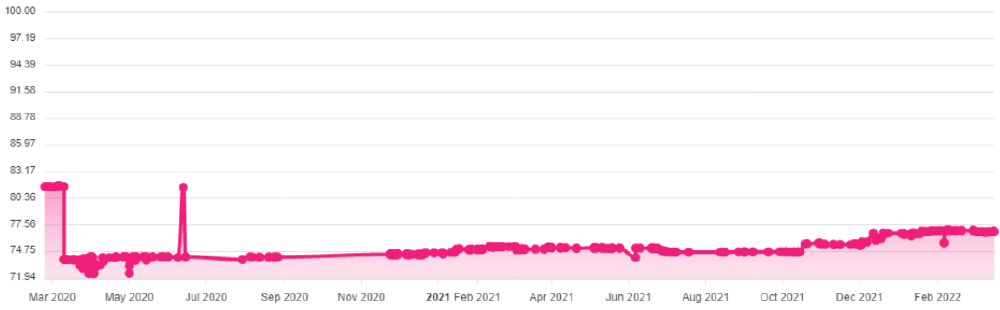

Codecov 12 is also used to measure code coverage of the GitHub repository for every pull request. Code coverage is a measure (in percent) of the degree to which the source code of a program is executed when a particular test suite is run 13. RF’s code coverage is currently 76.87% according to Codecov 14.
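As a rough illustration of the metric, line coverage can be computed as the share of executable lines that the test suite actually ran. The sketch below is an assumption-laden simplification: the line counts are invented so that the result matches the percentage quoted above, and Codecov's own accounting (for example of partially covered lines) may differ.

```python
def coverage_percent(covered_lines, total_lines):
    """Line coverage: percentage of executable lines executed by the tests."""
    if total_lines == 0:
        raise ValueError("total_lines must be positive")
    return 100.0 * covered_lines / total_lines


# Hypothetical counts, chosen only to reproduce the quoted figure.
print(round(coverage_percent(7687, 10000), 2))  # 76.87
```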

Figure: Code coverage

Hotspot components

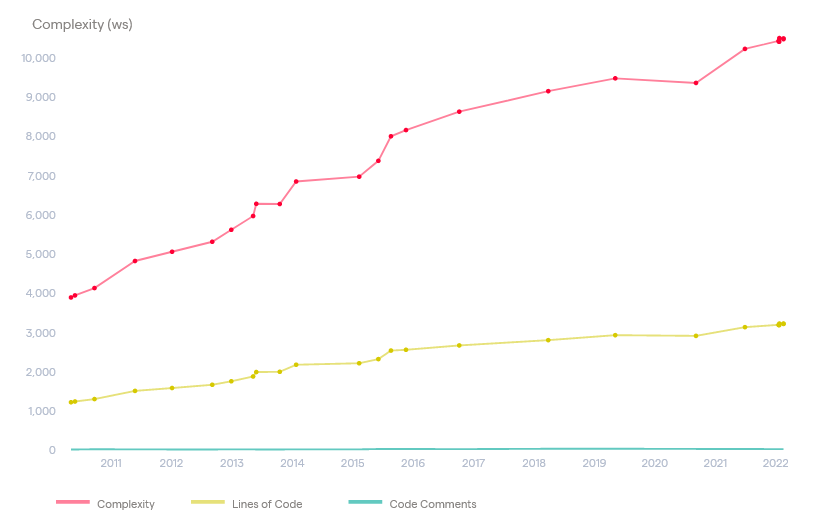

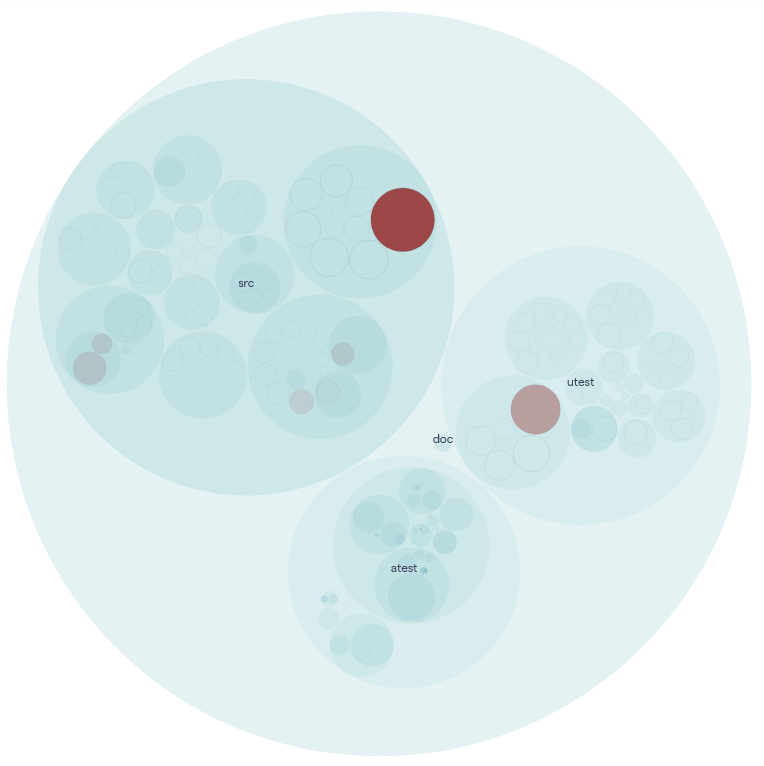

A hotspot analysis helps us identify the modules on which most development time is spent. We used the CodeScene tool 15 to analyze the hotspots in the RF codebase. In this section, we highlight a few of these hotspots through a visual representation and discuss possible causes and implications.

Figure: Hotspot Analysis of Robot Framework Codebase

Inspecting the code at the root level, the most active hotspot is src, followed by utest and finally atest. Within src, the major hotspots are the libraries, running, and parsing modules.

libraries provides the methods needed by every extension that is built for RF. running provides the Python scripts that set up and customize test execution, the heart of any test automation tool. parsing has the crucial task of interpreting the tokens and keywords provided by the user.

Finally, looking at individual Python files, BuiltIn.py, which is part of libraries, has 3230 lines of code. Its development activity has increased steadily over the years, and its complexity has grown at an even higher rate. The increase in complexity can be attributed to better error handling, new methods (e.g. using a dictionary in a function for the first time), and additional conditional statements to support more use cases and extensions.

Figure: Trend Analysis of BuiltIn.py

Looking at the past, we see that many of these hotspots have evolved to match technology standards and needs. One example is the introduction of robot.api.parsing in the parsing module with RF version 4.0 for improved extension support 16; another is the switch of the code from Python 2 to Python 3. Additionally, while some old hotspots remain active to date, others are no longer under constant development.

Looking towards the future, we believe many of these hotspots, like BuiltIn.py, will remain hotspots, because the code needs to adapt as RF expands and more functionality and extensions are added.

Code Health

- Low Cohesion: Cohesion is a measure of how well the elements in a file belong together. Low Cohesion is problematic since it means that the module contains multiple behaviors. For example, consider a Python file that has both I/O and algebraic operation functions.

- Bumpy Road: A Bumpy Road is a function that contains multiple chunks of nested conditional logic. This increases the load on working memory and makes refactoring expensive.

- Modularity Issue: When a single file has a lot of functions it becomes gigantic and hence difficult to test.

- Code Duplication: Duplicated code often leads to code that’s harder to change since the same logical change has to be done in multiple functions.

- Large Method: Overly long functions make the code harder to read.

Figure: Robot Framework Code Health Analysis

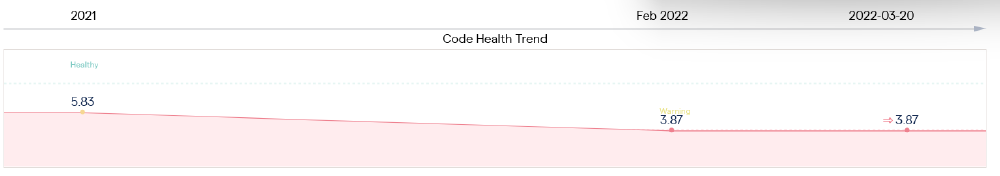

Although many components of Robot Framework’s codebase suffer from low code health, here we highlight the hotspot BuiltIn.py.

The BuiltIn.py file suffers from low cohesion because it holds many kinds of functions in a single file, such as converter functions, dictionary-related functions, and comparison functions. This makes testing difficult, since the module does not have a single, definite purpose. A solution could be to create separate files dedicated to the different kinds of operations.

It also suffers from the Bumpy Road issue, because many of its functions, such as _Verify.should_not_contain, have a lot of conditional statements. A solution is to break each such function into smaller, well-categorized functions.
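The suggested refactoring can be sketched as follows. This is a hypothetical, heavily simplified example for lists of strings, not the actual implementation of `_Verify.should_not_contain`; the names `normalize` and `check_not_contains` and their parameters are assumptions made for illustration. The idea is to move each optional normalization into a flat helper so that the main function no longer nests conditionals.

```python
def normalize(value, ignore_case=False, strip_spaces=False):
    """Apply each optional normalization as its own flat step."""
    if ignore_case:
        value = value.lower()
    if strip_spaces:
        value = value.strip()
    return value


def check_not_contains(container, item, ignore_case=False, strip_spaces=False):
    """Raise AssertionError if the normalized item occurs in the container."""
    item = normalize(item, ignore_case, strip_spaces)
    members = [normalize(member, ignore_case, strip_spaces) for member in container]
    if item in members:
        raise AssertionError(f"{container!r} contains {item!r}")


# Passes silently: "python" is not in the list.
check_not_contains(["Robot", "Framework"], "python")
```

With the normalization isolated, each piece can be unit tested on its own, which is the main benefit the refactoring aims for.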

Other issues include modularity (3230 lines of code in one file) and code duplication (e.g. _Verify._should_be_equal and _Verify.should_not_be_equal are similar functions that could be combined using a flag).

Another interesting insight about this file is that its overall code health has decreased over time, highlighting the need to rethink how its contents are organized.
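Combining two near-duplicate checks behind a flag could look like the sketch below. It is an illustration of the general technique only: the helper `_verify_equality` and its `expect_equal` flag are hypothetical, and the real keywords in BuiltIn.py accept more options than shown here.

```python
def _verify_equality(first, second, expect_equal, msg=None):
    """Shared check; expect_equal selects which outcome counts as a failure."""
    is_equal = first == second
    if is_equal != expect_equal:
        relation = "!=" if expect_equal else "=="
        raise AssertionError(msg or f"{first!r} {relation} {second!r}")


def should_be_equal(first, second, msg=None):
    """Fail unless the two values are equal."""
    _verify_equality(first, second, expect_equal=True, msg=msg)


def should_not_be_equal(first, second, msg=None):
    """Fail if the two values are equal."""
    _verify_equality(first, second, expect_equal=False, msg=msg)


should_be_equal(1, 1)       # passes
should_not_be_equal(1, 2)   # passes
```

A logical change, such as a new comparison option, then has to be made in one place instead of two.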

Figure: Code Health Timeline for BuiltIn.py

Overall, the code health index that CodeScene reports for the RF code base is 6.5/10, which indicates that considerable improvement in code health is needed.

Quality Culture

RF, being an open-source system, encourages contributors of various skill levels. While the large number of contributors is indicative of the framework’s growth, maintaining code quality is of utmost importance for sustaining the project. The lead developers, while open to suggestions and input from many sources, constantly steer the direction of development in issue and PR discussions on GitHub, on Slack, and elsewhere.

- The lead developers ask contributors to make sure all of their changes are accompanied by appropriate tests. This can be seen in multiple PR comments, for example in PR#4007. The same is observed in issue discussions such as issue#3985 and issue#4156.

- Every fix is strategically included in the appropriate version, keeping larger compatibility issues in mind. The discussions in issue#4086 and PR#4085 illustrate this.

- Keeping the larger context in mind, every change is checked for optimization at every level possible. Examples can be found in PR#4126, issue#4095, and issue#4068.

- A lot of effort goes into making the code more readable. Changes are regularly checked against the coding format and variable naming standards (PEP 8) 17. The following discussions illustrate this: issue#4229, PR#4147.

- The importance of documentation for RF cannot be overstated. Because RF is an open-source system, its effectiveness is built on users’ understanding of the framework and its capabilities. The issue that we volunteered to fix, issue#4260, was a documentation issue that the lead developer had marked as critical, demonstrating the significance of appropriate documentation in RF.

Technical Debt

Technical debt in software is akin to lugging dead weight along: it may not halt development, but it certainly slows it down. Tools like SonarQube 18 help uncover technical debt in a software project and grade the product’s maintainability based on the estimated debt ratio.

SonarQube discovered 727 issues in Robot Framework, 651 of which are classified as technical debt. The atest and utest modules in the source code are responsible for the majority of this debt. As acknowledged by the lead developers, these modules are a bit muddled right now, and their cleanup will most likely be prioritized at some point in the future.

The overall debt ratio of the Robot Framework GitHub project is roughly 0.2 percent, which is extremely low, so the code is in good shape in terms of technical debt. In addition, the total effort estimated to resolve the technical debt is as little as ten days. From all of this, we can infer that a 0.2 percent debt ratio is quite acceptable for a testing framework in terms of quality, with the majority of the debt coming from the atest and utest modules.

Figure: Technical Debt Tree Map
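To see how the two quoted figures relate, the debt ratio can be worked through as remediation cost divided by estimated development cost. The sketch below rests on assumptions that are not stated in the analysis above: an 8-hour working day, a development cost of 30 minutes per line of code (which we understand to be SonarQube's default setting), and a hypothetical line count chosen only so that the numbers agree. It is not a measurement of the RF codebase.

```python
MINUTES_PER_DAY = 8 * 60   # assumption: 8-hour working day
MINUTES_PER_LINE = 30      # assumption: cost to develop one line of code


def debt_ratio(remediation_minutes, lines_of_code):
    """Technical debt ratio: remediation cost / estimated development cost."""
    development_minutes = MINUTES_PER_LINE * lines_of_code
    return remediation_minutes / development_minutes


remediation = 10 * MINUTES_PER_DAY   # ten days of effort = 4800 minutes
hypothetical_loc = 80_000            # illustrative line count, not measured

print(f"{debt_ratio(remediation, hypothetical_loc):.1%}")  # 0.2%
```

Under these assumptions, ten days of remediation effort corresponds to a 0.2 percent ratio for a codebase of about 80,000 lines, which shows why a small absolute effort translates into a very low ratio.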

References

1. Robot Framework CONTRIBUTING.rst. https://github.com/robotframework/robotframework/blob/master/CONTRIBUTING.rst

2. Robot Framework’s Slack community. https://robotframework.slack.com/

3. Reproducibility section of CONTRIBUTING.rst. https://github.com/robotframework/robotframework/blob/master/CONTRIBUTING.rst#reporting-bugs

4. Coding conventions section of CONTRIBUTING.rst. https://github.com/robotframework/robotframework/blob/master/CONTRIBUTING.rst#coding-conventions

5. Robot Framework CI workflows. https://github.com/robotframework/robotframework/tree/1e14ff2783078ab9404fb9ecb0a29465b10f37fa/.github/workflows

6. GitHub workflows. https://docs.github.com/en/actions/learn-github-actions/understanding-github-actions#workflows

7. GitHub Dependabot. https://docs.github.com/en/code-security/dependabot

8. Robot Framework documentation. https://robotframework.org/robotframework/

9. Robot Framework on PyPI. https://pypi.org/project/robotframework/

10. Tests section of CONTRIBUTING.rst. https://github.com/robotframework/robotframework/blob/master/CONTRIBUTING.rst#tests

11. Python’s unittest module. https://docs.python.org/3/library/unittest.html

12. Codecov. https://about.codecov.io/

13. Code coverage. https://en.wikipedia.org/wiki/Code_coverage

14. Robot Framework’s Codecov dashboard. https://app.codecov.io/gh/robotframework/robotframework/

15. CodeScene tool. https://codescene.com/

16. robot.api.parsing module documentation. https://robot-framework.readthedocs.io/en/master/autodoc/robot.api.html#module-robot.api.parsing

17. PEP 8 style guide for Python. https://peps.python.org/pep-0008/

18. SonarQube tool. https://www.sonarqube.org/