Audacity’s Scalability Challenges

As an application, Audacity has to deal with many challenges, such as importing and managing files of different sizes and processing a variety of audio editing tasks. These are handled by different algorithms, and in this section we try to identify the scalability issues related to them.

Feature Scaling

A core question for any open-source project is how easy it is to contribute and integrate new features. In Audacity’s case, the combination of a constantly evolving code base, a large contributor community, and a substantial amount of technical debt has left the architecture tightly coupled [1]. Adding or altering a feature whose code is scattered across multiple source files inevitably triggers refactoring across a large part of the code base (see essay 3) [2]. The Audacity team and community have continually looked for ways to alleviate this burden, such as more modular design choices and separating the audio processing functions from the GUI, to mitigate the downsides of the tightly coupled classes.

File Management

One of the fundamental features the Audacity team had to design around is how audio files are handled. Importing, exporting and managing audio files with minimal delay is a must-have to gain an edge over the competition, and it largely determines how performant and usable an audio editor is. These core functionalities must respond quickly, without getting in the way of a user’s creativity [3].

Long Audio Files

A specific scenario is the management of long audio segments. These segments range in duration from a few seconds for a clip to several hours for a podcast or video. Handling audio files of varying sizes without unnecessary difficulties and delays is a key challenge for any audio editing application. A realistic and critical example is how Audacity manages memory usage when a user edits an audio file in the middle of a track. Observing how the software performs in such a circumstance gives a good idea of the scalability of the application. Later in this essay, we observe, empirically analyze and discuss Audacity’s solution to this problem.

Applying Effects

One of the features most frequently requested by the community is real-time effects. Currently, Audacity does not provide any multi-core functionality for applying real-time effects to audio tracks. In a realistic use case, a user must wait for the effect [4] to be applied to an entire track before being able to interact with the software again. For smaller clips, the time required is minimal. For larger clips, such as podcasts or movies, this waiting time can become substantial and can strongly affect the use of the software for production work, where delivery time is often a key aspect. The problem multiplies if several effects must be applied.

Scalability Empirical Quantitative Analysis

In this section, we provide an empirical analysis of Audacity’s performance on files of different lengths. We compare import time, export time, and the time needed to apply three effects (Reverb, Compressor and Echo) to audio tracks of different lengths. All tests were conducted on the same machine to avoid hardware-related inconsistencies.

Results:

| Test | Test 1 | Test 2 | Test 3 |
|---|---|---|---|
| Length (hours) | 1 | 2 | 3 |
| Size (MB) | 57 | 114 | 171 |
| Import time (sec) | 8 | 23 | 41 |
| Export time (sec) | 17 | 33 | 55 |
| Reverb effect (sec) | 15 | 35 | 50 |
| Compressor effect (sec) | 25 | 42 | 90 |
| Echo effect (sec) | 7 | 22 | 25 |

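As seen in the table above, processing time for the studied effects grows faster than file length in most cases. Doubling the length of the input file increases effect processing time by a factor of about 1.7 (Compressor) to 3.1 (Echo), with Reverb at roughly 2.3. Tripling the length increases processing time by factors between 3.3 and 3.6, all above three. Import time grows even faster, by a factor of about 5.1 for the three-hour file. With only three measurements per operation, these figures are indicative rather than conclusive, but they suggest that Audacity does not scale linearly with file length, which might cause problems for users who want to process long audio files.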
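The scaling factors can be recomputed directly from the table. The short Python sketch below does this; the timings are copied from the results table, while the names `timings` and `scaling_factors` are ours and purely illustrative.

```python
# Timings in seconds, copied from the results table.
# Inner keys are the file lengths in hours.
timings = {
    "Import": {1: 8, 2: 23, 3: 41},
    "Export": {1: 17, 2: 33, 3: 55},
    "Reverb": {1: 15, 2: 35, 3: 50},
    "Compressor": {1: 25, 2: 42, 3: 90},
    "Echo": {1: 7, 2: 22, 3: 25},
}


def scaling_factors(row):
    """Return time(n hours) / time(1 hour) for every measured length n > 1."""
    base = row[1]
    return {hours: seconds / base for hours, seconds in row.items() if hours != 1}


factors = {name: scaling_factors(row) for name, row in timings.items()}

for name, f in factors.items():
    print(f"{name:<11} 2x length -> {f[2]:.2f}x time, 3x length -> {f[3]:.2f}x time")
```

A factor equal to the length multiplier (2 or 3) would indicate linear scaling; values above it indicate that the operation becomes relatively slower as files grow.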

Scalability from an Architectural Point of view

In this section, we present three architectural decisions that affect the scalability of Audacity. The first one boosts scalability, the second one can be improved, and the last one hinders scalability substantially.

BlockFiles - An Ideal Solution

Without BlockFiles, editing long audio files becomes difficult. Firstly, a long audio clip of multiple hours does not necessarily fit in RAM, since file sizes can range from 100 to 1000 MB. Secondly, inserting a single effect or clip requires the whole clip to be copied into RAM, edited, and then written back to the drive, wasting precious system resources. Even worse, if one clip is copied into another, both must be loaded into memory [5].

To overcome these issues, Audacity pioneered the BlockFiles library. This library attempts to strike a balance between two core goals: the ability to edit large files and a reduction in the number of disk fetch operations required. BlockFiles achieves this balance by breaking audio files up into blocks and storing them efficiently on disk, indexed through Audacity’s own XML-based project format (.AUP). In a realistic use case, if a user deletes one block from the audio file, no copy calls are needed, because BlockFiles only has to access the specific block in question. By employing this library, Audacity is able to edit large files without noticeable delays [5].
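The idea can be illustrated with a minimal Python sketch. It is not Audacity’s actual implementation: the `Block` and `BlockSequence` classes and the file paths are hypothetical stand-ins. The sketch only shows why editing a track stored as a list of block references avoids copying the audio data itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """Hypothetical stand-in for one block of samples stored on disk."""
    path: str    # where the samples would live on disk (illustrative only)
    length: int  # number of samples in the block


class BlockSequence:
    """A track represented as an ordered list of block references."""

    def __init__(self, blocks):
        self.blocks = list(blocks)

    def total_samples(self):
        return sum(block.length for block in self.blocks)

    def delete_block(self, index):
        # Only the list of references changes; no sample data is read or copied.
        return self.blocks.pop(index)

    def insert_blocks(self, index, new_blocks):
        # Pasting another clip splices its references into the list.
        self.blocks[index:index] = list(new_blocks)


track = BlockSequence(Block(f"b{i}.au", 1000) for i in range(4))
removed = track.delete_block(1)
print(removed.path, track.total_samples())
```

The cost of deleting or pasting depends on the number of blocks touched, not on the total length of the track, which is what makes this layout attractive for multi-hour files.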

Multi-threaded Real-time effects

Applying real-time effects to (long) audio tracks can take a lot of time, especially if many effects are stacked on top of each other. The current implementation relies on a multithreaded paradigm: a master thread schedules worker threads to work on batches of samples, so that each worker thread applies the effects to its designated samples. This multithreaded approach is much more efficient than a linear single-threaded method, but it also has drawbacks. As the number of effects and the track length scale up, Audacity will either consume more and more processing power or require much more time before the effect application is finalized. We think this can be improved, as described in the next section, by using either a dedicated Graphics Processing Unit (GPU) [6] or a Digital Signal Processor (DSP) [7].
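The master/worker scheme described above can be sketched in a few lines of Python. This is an illustration of the scheduling structure only, not Audacity’s code: `apply_gain`, `split_into_batches` and `process_track` are hypothetical names, and the effect is a simple stateless gain. Effects that carry state across samples, such as Reverb or Echo, would need extra handling at batch boundaries, which the sketch ignores.

```python
from concurrent.futures import ThreadPoolExecutor


def apply_gain(batch, gain=0.5):
    """Hypothetical stateless effect: scale every sample in the batch."""
    return [sample * gain for sample in batch]


def split_into_batches(samples, batch_size):
    """Cut the track into consecutive batches of at most batch_size samples."""
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]


def process_track(samples, effect, batch_size=4, workers=3):
    """Master role: hand batches to worker threads and reassemble the results."""
    batches = split_into_batches(samples, batch_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so the track stays in order.
        processed = pool.map(effect, batches)
        return [sample for batch in processed for sample in batch]


print(process_track(list(range(10)), apply_gain))
```

In CPython, threads do not run pure-Python arithmetic in parallel, so this sketch shows how the work is divided rather than a real speed-up; a native implementation would run the batches on separate cores.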

Dependency on External Libraries - PortAudio, AudioIO and wxWidgets

At a lower level, the application is managed through three primary threads. One thread retrieves data from the computer’s audio devices and appends it to a buffer, one thread processes the buffered data, and one thread is in charge of GUI management and updates. This system would be an ideal solution if the threads had a more centralized implementation where, for example, the same abstract classes were used. Unfortunately, the logic of each thread depends on the library implementing it. The data placed into the capture buffer is managed by the PortAudio library, while the processing of the data is managed by the AudioIO library, and the two share no centralized definitions. This decentralized approach might limit what the Audacity team and community can achieve, while also adding unnecessary overhead to the application.
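The division of labour between the capture thread and the processing thread can be pictured as a producer/consumer pair sharing a buffer. The Python sketch below is a simplified illustration under our own assumptions: it uses the standard `queue` and `threading` modules rather than PortAudio, AudioIO or wxWidgets, and the functions `capture`, `process` and `run_pipeline` are hypothetical. The main thread plays the role of the GUI thread that waits for results.

```python
import queue
import threading


def capture(buffer, chunks):
    """Stands in for the audio-device thread: append captured chunks to the buffer."""
    for chunk in chunks:
        buffer.put(chunk)
    buffer.put(None)  # sentinel: no more audio will arrive


def process(buffer, results):
    """Stands in for the processing thread: drain the buffer until the sentinel."""
    while True:
        chunk = buffer.get()
        if chunk is None:
            break
        results.append(sum(chunk) / len(chunk))  # placeholder work: mean level


def run_pipeline(chunks):
    """Main (GUI-like) thread: start both workers and wait for them to finish."""
    buffer = queue.Queue(maxsize=8)  # bounded, so capture blocks if processing lags
    results = []
    producer = threading.Thread(target=capture, args=(buffer, chunks))
    consumer = threading.Thread(target=process, args=(buffer, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results


print(run_pipeline([[1, 2, 3], [4, 4]]))
```

Here both threads agree on a single buffer type and a single end-of-stream convention. The concern raised above is that in Audacity these conventions are dictated separately by each library rather than by one shared definition.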

Proposals for architectural changes

Solution for dependencies

A potential way to remove the need for external libraries is for the Audacity team to build its own centralized implementation. This might be costly in terms of time, resources and manpower, but it would help the project in the long run. Right now, these dependencies force the software to incur considerable overhead through unnecessary copying of data and the use of threads. If done correctly, higher speeds and a better design could be achieved with fast data-switching methods such as an interrupt-driven approach, with little to no negative impact on the code and performance of Audacity. Replacing the external libraries in this way would also allow Audacity to reduce the coupling between its classes, increasing the number of ways in which the application can be extended.

Dedicated Hardware for Real-Time effects

To overcome both the slower computation of the mathematical operations behind a real-time effect on the CPU and the threading limitation of the application, Audacity could include a module for processing parallelizable tasks on a GPU [6] or a DSP [7]. As only a small subset of the functionality in Audacity is parallelizable, Audacity developers would need to group the audio processing effects that can be parallelized. When a user chooses to apply an effect, Audacity could then pass the request to either the CPU or the GPU (if one exists on the machine). This architectural change would allow users with dedicated hardware, notably podcasters and audio engineers, to maximize performance for demanding processing tasks.

The group of functionalities that could be processed on a GPU/DSP includes [8]:

- Compression

- Reverb effects

- Delays

- Limiters

- Auto-filters

- Custom effects

These functionalities consist of applying mathematical operations to a signal, which makes them prime candidates for parallelization on a GPU/DSP [9]. However, as not all machines are equipped with dedicated hardware, the GUI preferences should gain a setting that lets the user choose which hardware to use. Additionally, the module could take into account the amount of work required, to avoid using the GPU for smaller tasks where transferring the data from the CPU to the GPU and back would take longer than simply processing it on the CPU. The final element needed for GPU usage is the library required to operate it. These libraries normally come directly from the manufacturers, such as the Nvidia CUDA Toolkit [10].
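The choice between CPU and GPU described above could be sketched as a simple cost comparison. The following Python function is a hypothetical illustration: `choose_device` and all of its parameters are our own names, and the throughput, transfer and overhead figures are made-up placeholders rather than measurements of any real hardware.

```python
def choose_device(
    num_samples,
    gpu_available,
    preference="auto",       # user setting: "auto", "cpu" or "gpu"
    cpu_throughput=5e7,      # samples/second on the CPU (placeholder value)
    gpu_throughput=1e9,      # samples/second on the GPU (placeholder value)
    transfer_rate=5e8,       # samples/second over the CPU-GPU link (placeholder)
    gpu_fixed_overhead=0.01, # seconds of setup cost per GPU job (placeholder)
):
    """Pick the device with the lower estimated processing time."""
    if not gpu_available or preference == "cpu":
        return "cpu"
    if preference == "gpu":
        return "gpu"

    cpu_time = num_samples / cpu_throughput
    # Data has to travel to the GPU and back, hence the factor of two.
    gpu_time = (
        gpu_fixed_overhead
        + 2 * num_samples / transfer_rate
        + num_samples / gpu_throughput
    )
    return "gpu" if gpu_time < cpu_time else "cpu"


print(choose_device(1_000, gpu_available=True))
print(choose_device(10_000_000, gpu_available=True))
```

With these placeholder numbers, short clips stay on the CPU because the fixed overhead and transfer cost dominate, while long tracks are sent to the GPU. A real implementation would need to calibrate the constants on the user’s machine.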

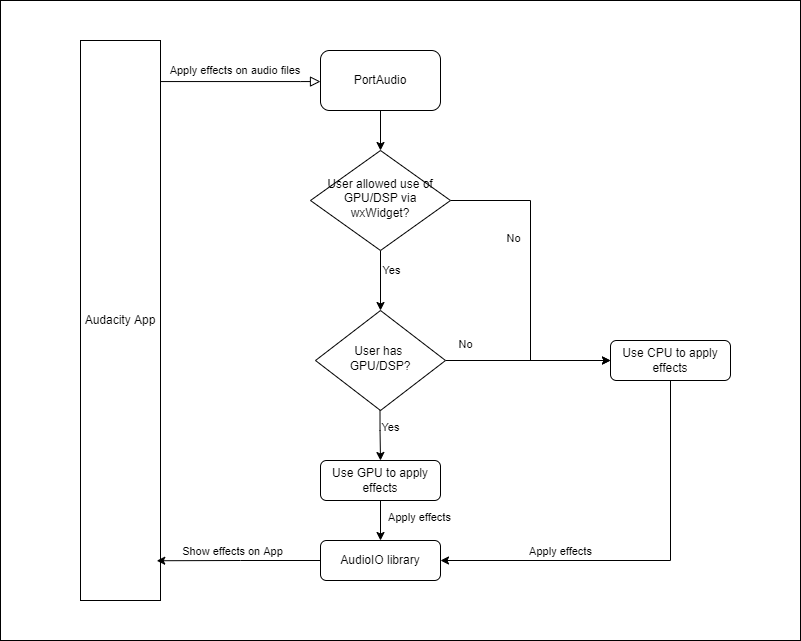

In the diagram below, we describe how effects are currently applied to audio files in Audacity, without any GPU/DSP integration.

Figure: Current workflow to process audio files on Audacity

In the diagram below, we describe how, in the proposed solution, the workflow would run between all libraries responsible for audio processing.

Figure: Proposed workflow to process audio files on Audacity using a GPU/DSP

Why Dedicated Hardware for Audio Processing?

In the visual domain, GPUs are used to parallelize the processing of effects and rendering. These tasks, notably rendering, require real-time responses for continuous visual feedback. Audio processing overlaps closely with image processing, so the proposed architectural change could in theory achieve similar real-time performance. Additionally, decoupling the processing from specific hardware would allow Audacity to expand its capabilities on future machines, notably as new Ryzen CPUs come with dozens of cores and threads.

References

- https://desosa2022.netlify.app/projects/audacity/posts/essay-3/
- https://manual.audacityteam.org/man/audacity_projects.html
- https://towardsdatascience.com/accelerated-signal-processing-with-gpu-support-767b79af7f41
- https://stampsound.com/do-you-need-a-graphics-card-for-music-production/
- https://www.ti.com/lit/an/spra053/spra053.pdf?ts=1648343728570&ref_url=https%253A%252F%252Fwww.google.com%252F