Scalability in Backstage

One of the potential benefits of using Backstage is its extensibility and scalability: new tools and services can easily be integrated (via plugins), and the functionality of existing ones can be extended. As described by its creators, Backstage is essentially a visual tool for managing services on cloud platforms that also holds the documentation for those services. Because Backstage can run on cloud clusters and can be split up entirely into separate services, it can become a fully scalable system. Backstage claims it can serve thousands of developers,1 and it does so in practice.2

Figure: Backstage supports more than 1000 users

This essay would therefore be very short if it discussed only scalability, so we have extended it with other relevant information about Backstage, such as its extensibility and maintainability. Moreover, because Backstage relies on cloud clusters for its scalability, some of the discussion points suggested by the course, such as proposing architectural changes to make the system more scalable, would not be very informative in this essay. As we identified no issues that affect Backstage’s scalability, any change we could propose would be unlikely to improve it and might even reduce it, so the last three suggested discussion points were left out of the essay. The points this essay does not discuss are therefore: a proposal for architectural changes that address the identified issues, diagrams depicting the “as is” and “to be” architectural designs, and an argument showing how the proposed changes would address the identified scalability issues.

The only likely sources of scalability issues in the system are the database and the deployment environment. However, most deployment environments, such as Kubernetes (often combined with the Helm package manager), Heroku, Amazon Web Services, Azure Cloud Services and Google Cloud Platform, are designed to be fully scalable. Most databases also offer several options for scaling, such as sharding, declarative partitioning and synchronization. These should therefore not lead to scalability issues, and if such issues do arise, a developer can switch to another environment or database that offers better scalability.
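To make the sharding option more concrete, here is a minimal sketch of hash-based sharding: a key (for instance a catalog entity reference) is hashed and mapped to one of a fixed number of database shards. The function names and the choice of the FNV-1a hash are illustrative assumptions, not part of Backstage or PostgreSQL.

```typescript
// Hypothetical sketch of hash-based sharding: every key is routed to exactly
// one of `shardCount` database shards. Not code from Backstage or PostgreSQL.

// FNV-1a 32-bit hash: cheap, deterministic, and good enough for routing.
function fnv1a(key: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    // Multiply by the FNV prime and keep the result as an unsigned 32-bit int.
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Returns the index (0 .. shardCount - 1) of the shard that stores `key`.
function shardFor(key: string, shardCount: number): number {
  if (!Number.isInteger(shardCount) || shardCount < 1) {
    throw new RangeError("shardCount must be a positive integer");
  }
  return fnv1a(key) % shardCount;
}

// Example: the same entity always lands on the same shard.
console.log(shardFor("component:default/my-service", 4));
```

With plain modulo routing, changing `shardCount` remaps most keys; consistent hashing is the usual remedy. Inside PostgreSQL itself, declarative partitioning offers a comparable built-in mechanism through `PARTITION BY HASH`.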

Setting up Backstage in a fully scalable Kubernetes environment

Backstage is vendor-agnostic and can therefore be deployed on various cloud platforms (AWS, Google Cloud, Azure, etc.). Because it is platform-agnostic, however, deploying Backstage does require some work. Users are expected to build their own Docker images from their Backstage installation,3 and then follow one of the recipes in the documentation to deploy the resulting container.

One of the main recommended deployment strategies is to run Backstage on a Kubernetes cluster. We first create a namespace to isolate all Backstage services from the other services the cluster might be running. We then add a PostgreSQL database service to this namespace, setting up any required security measures in the process. The database service can be set up to scale horizontally using sharding, declarative partitioning and PostgreSQL’s Foreign Data Wrapper. After this, we bundle the React frontend of Backstage into static files that can be served by the backend. Finally, we set up a service to run the Backstage backend, which can be scaled out to multiple instances that each serve the frontend and read and write any data they need in the shared database.
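The deployment steps above can be sketched as Kubernetes objects. The following minimal sketch writes them as plain TypeScript objects that serialize to JSON, which `kubectl apply -f` accepts as well as YAML. The namespace, image tag, Secret name `postgres-secrets` and the `POSTGRES_HOST`/`POSTGRES_PORT` variable names are assumptions for illustration; port 7007 is the backend’s usual default. The PostgreSQL workload itself is omitted and assumed to be reachable under the service name `postgres`.

```typescript
// Minimal sketch of the Backstage objects in a Kubernetes cluster.
// All names, the image tag and the env var names are hypothetical.
interface Manifest {
  apiVersion: string;
  kind: string;
  metadata: { name: string; namespace?: string; labels?: Record<string, string> };
  spec?: unknown;
}

function backstageManifests(namespace: string, image: string, replicas: number): Manifest[] {
  const labels = { app: "backstage" };
  return [
    // 1. A dedicated namespace isolates Backstage from other workloads.
    { apiVersion: "v1", kind: "Namespace", metadata: { name: namespace } },
    // 2. The backend Deployment; every replica also serves the bundled frontend.
    {
      apiVersion: "apps/v1",
      kind: "Deployment",
      metadata: { name: "backstage", namespace, labels },
      spec: {
        replicas, // horizontal scaling happens here
        selector: { matchLabels: labels },
        template: {
          metadata: { labels },
          spec: {
            containers: [
              {
                name: "backstage",
                image,
                ports: [{ name: "http", containerPort: 7007 }],
                // Assumed variables that app-config.yaml reads to reach PostgreSQL.
                env: [
                  { name: "POSTGRES_HOST", value: "postgres" },
                  { name: "POSTGRES_PORT", value: "5432" },
                ],
                // Assumed Secret holding the database credentials.
                envFrom: [{ secretRef: { name: "postgres-secrets" } }],
              },
            ],
          },
        },
      },
    },
    // 3. A Service that load-balances traffic across all backend replicas.
    {
      apiVersion: "v1",
      kind: "Service",
      metadata: { name: "backstage", namespace },
      spec: { selector: labels, ports: [{ name: "http", port: 80, targetPort: "http" }] },
    },
  ];
}

// Wrap the objects in a v1 List so they can be written to a single JSON file.
const list = { apiVersion: "v1", kind: "List", items: backstageManifests("backstage", "backstage:1.0.0", 3) };
console.log(JSON.stringify(list, null, 2));
```

Generating manifests in code is only one option; the same objects are more commonly written directly as YAML or packaged as a Helm chart.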

Adding a plugin to Backstage then creates a separate service for the backend to interact with. When added, a plugin creates an interface between Backstage and a service that is not part of Backstage. This interface scales fully on the Backstage side and is limited only by the scalability of the external service that the plugin integrates.
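The claim that a plugin is limited only by the external service can be expressed as a simple bottleneck model. This is a simplifying assumption for illustration (it ignores latency, caching and retries), and all names and numbers are hypothetical.

```typescript
// Simplified bottleneck model: requests a plugin forwards to an external
// service cannot be served faster than the slower of the two sides.
interface Capacity {
  backendReplicas: number;    // number of Backstage backend instances
  perReplicaRps: number;      // requests/s one backend instance can forward
  externalServiceRps: number; // requests/s the external service can handle
}

// Effective end-to-end throughput for the plugin.
function effectiveThroughput(c: Capacity): number {
  const backstageSide = c.backendReplicas * c.perReplicaRps;
  return Math.min(backstageSide, c.externalServiceRps);
}

// Number of backend replicas after which adding more no longer helps.
function replicasBeforeBottleneck(c: Capacity): number {
  return Math.ceil(c.externalServiceRps / c.perReplicaRps);
}

// Hypothetical numbers: 100 req/s per replica, external service handles 500 req/s.
const small: Capacity = { backendReplicas: 2, perReplicaRps: 100, externalServiceRps: 500 };
const large: Capacity = { ...small, backendReplicas: 10 };
console.log(effectiveThroughput(small), effectiveThroughput(large), replicasBeforeBottleneck(small));
```

With these numbers, scaling the backend from 2 to 10 replicas raises throughput from 200 to 500 req/s, but every replica beyond the fifth adds nothing: the external service has become the limit.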

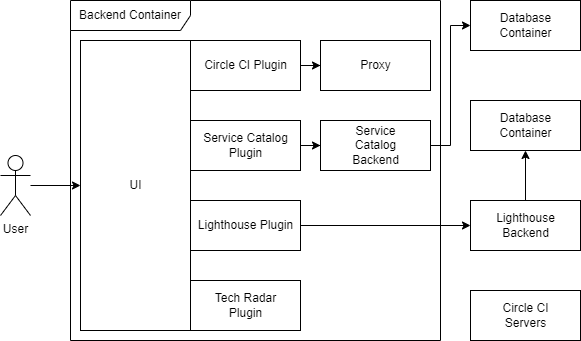

The following image depicts the current architecture of Backstage, which is designed to be fully scalable.

Figure: The current scalability architecture of Backstage

Required Kubernetes resources

It can be very tempting to immediately set a cloud cluster to autoscale, but it is also important to take into account the potential cost of running a cluster. We give a short overview of the considerations to take into account when making a Backstage deployment. It is very hard to make empirical measurements of a Backstage environment: Backstage has scaling in mind starting from its architectural decisions, but it is also highly customizable. Spotify, for example, uses over 100 custom plugins.4

First, let’s look at the database layer. Backstage does not store massive amounts of data; rather, it incorporates data from many sources into one place. Users of Backstage also do not write to the database very often. As Backstage is mostly meant for reading documentation, sharing service status and sharing project information, the backend can focus on database read requests. Read-focused databases are generally less resource-intensive and thus cheaper to run.5 To minimize database costs even further, Backstage offers a per-plugin frontend and backend cache API.

Next, let us look at the frontend of Backstage. The frontend is written in React and built into a so-called static site, which means that the whole website is delivered to the user in one go. Static sites are generally very cheap to host, as the site does not need to be generated for every client.6 What does take up a lot of resources is the Backstage backend. The backend is a Node.js application that serves the API requests of the frontend and interfaces with external services. It also serves the static site.
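Why a cache lowers database cost in a read-heavy system can be shown with a generic read-through cache with a time-to-live. This sketch is not Backstage’s cache API; the class name, the loader function and the injectable clock are hypothetical choices made so the example runs on its own.

```typescript
// Generic read-through cache with a time-to-live (TTL). Only cache misses
// reach the underlying loader, for example a database query.
class ReadThroughCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();

  constructor(
    private readonly load: (key: string) => Promise<V>, // e.g. a database read
    private readonly ttlMs: number,
    private readonly now: () => number = Date.now, // injectable clock for testing
  ) {}

  async get(key: string): Promise<V> {
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) {
      return hit.value; // fresh entry: no database access
    }
    // Miss or expired entry: read from the source and remember the result.
    const value = await this.load(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}
```

For example, 100 sequential reads of the same catalog entity within the TTL reach the database only once. This sketch does not deduplicate concurrent misses for the same key, so a burst of simultaneous first reads would still each hit the database.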

As mentioned before, Backstage is meant to run in a cloud environment and can therefore scale the backend out to a large number of instances.
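When the backend runs on Kubernetes, this scaling is typically automated with a Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) of 1.0. The simplified sketch below ignores stabilization windows, missing metrics and pod readiness, which the real controller handles. Its `maxReplicas` clamp is also what bounds cost: spend can never exceed `maxReplicas` times the cost of one backend instance.

```typescript
// Simplified Horizontal Pod Autoscaler rule, clamped to [minReplicas, maxReplicas].
function desiredReplicas(
  currentReplicas: number,
  currentMetric: number, // e.g. average CPU utilization across pods, in percent
  targetMetric: number,  // target value for that metric
  minReplicas: number,
  maxReplicas: number,
  tolerance = 0.1,
): number {
  const ratio = currentMetric / targetMetric;
  // Ratios close to 1.0 cause no scaling action, which avoids flapping.
  const desired = Math.abs(ratio - 1) <= tolerance
    ? currentReplicas
    : Math.ceil(currentReplicas * ratio);
  // The bounds cap both availability risk (min) and cost (max).
  return Math.min(maxReplicas, Math.max(minReplicas, desired));
}

// 3 replicas at 90% CPU with a 60% target: scale out to 5.
console.log(desiredReplicas(3, 90, 60, 2, 10));
```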

Scalability considerations for plugins

It isn’t far-fetched to call Backstage itself a statement about scalability: as a company grows and needs to maintain more and more services, Backstage offers a solution to this problem. This need also drives various design decisions that affect the scalability of the system.

Backstage’s design considerations are made specifically to keep the project scalable; in fact, scalability is one of the developers’ hard requirements. The project should be able to scale to hundreds of large packages without excessive wait times. To see how this is achieved, it is logical to start by looking at how the plugins themselves are structured. A new plugin has the following structure:

    new-plugin/
    ├── dist/
    ├── node_modules/
    ├── src/
    │   ├── components/
    │   │   ├── ExampleComponent/
    │   │   │   ├── ExampleComponent.test.tsx
    │   │   │   ├── ExampleComponent.tsx
    │   │   │   └── index.ts
    │   │   └── ExampleFetchComponent/
    │   │       ├── ExampleFetchComponent.test.tsx
    │   │       ├── ExampleFetchComponent.tsx
    │   │       └── index.ts
    │   ├── index.ts
    │   ├── plugin.test.ts
    │   ├── plugin.ts
    │   └── routes.ts
    ├── jest.config.js
    ├── jest.setup.ts
    ├── package.json
    ├── README.md
    └── tsconfig.json

First of all, every plugin is a standalone project. This has many benefits for development on Backstage. For instance, every plugin can easily be shipped and shared with others, making Backstage very customizable for all parties involved. Secondly, because each plugin is a standalone package, it works in isolation: a crash within a plugin only takes down that plugin, not the whole application. The trade-off is that repeating this boilerplate in every plugin increases the size of the codebase. However, following this convention makes the code easier to maintain and actually helps scalability in the longer term.
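The failure isolation described above can be illustrated with a hypothetical sketch in which each plugin is started inside its own try/catch, so an exception in one plugin is recorded and the others keep working. In a React frontend the same idea is usually implemented with error boundaries; the `PluginModule` shape and `startAll` function here are illustrative names, not Backstage APIs.

```typescript
// Hypothetical plugin shape: an id and a function that produces its output.
interface PluginModule {
  id: string;
  start: () => string;
}

interface PluginResult {
  id: string;
  ok: boolean;
  output: string;
}

// Starts every plugin independently; a failure is contained to that plugin.
function startAll(plugins: PluginModule[]): PluginResult[] {
  return plugins.map((p) => {
    try {
      return { id: p.id, ok: true, output: p.start() };
    } catch (err) {
      // Show a placeholder for the failing plugin only.
      const message = err instanceof Error ? err.message : String(err);
      return { id: p.id, ok: false, output: `Plugin "${p.id}" failed: ${message}` };
    }
  });
}
```

With three plugins where the middle one throws, the first and last still produce their output and only the middle one is replaced by an error placeholder.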

Most importantly, the standalone plugin structure enables a composable system in which the app loads plugins only when they are needed. The application can therefore grow to a large number of plugins without having to load them all at once. This makes it possible to run a full Backstage setup without being held back by the performance limits of a regular developer machine.
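Loading plugins only when needed can be sketched with a registry that stores loader functions instead of plugin code and runs each loader at most once. In a real bundle the loader would typically be a dynamic `import()`, which bundlers such as webpack split into a separate chunk; here the loaders are plain async functions so the example runs on its own. `LazyPluginRegistry` is a hypothetical name, not a Backstage API.

```typescript
type Loader<T> = () => Promise<T>;

// Registry that defers loading each plugin until it is first requested.
class LazyPluginRegistry<T> {
  private loaders = new Map<string, Loader<T>>();
  private loaded = new Map<string, Promise<T>>();

  register(id: string, loader: Loader<T>): void {
    this.loaders.set(id, loader); // nothing is loaded at registration time
  }

  // Loads a plugin at most once; concurrent callers share the same promise.
  load(id: string): Promise<T> {
    const existing = this.loaded.get(id);
    if (existing) return existing;
    const loader = this.loaders.get(id);
    if (!loader) return Promise.reject(new Error(`Unknown plugin: ${id}`));
    const promise = loader();
    this.loaded.set(id, promise);
    // Forget failed loads so that a later call can retry.
    promise.catch(() => this.loaded.delete(id));
    return promise;
  }

  loadedCount(): number {
    return this.loaded.size;
  }
}
```

Registering many plugins therefore costs almost nothing up front; only the plugins a user actually visits are loaded.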

Another aspect of the plugin side that can be argued to concern scalability, if not extensibility, is the introduction of a marketplace for plugins. The number of plugins in existence is impossible to estimate, since every company that has adopted Backstage can create its own private plugins. Even a conservative estimate of over a thousand plugins is plausible. However, only a small fraction is available in the marketplace: at the time of writing, the official marketplace contains 78 plugins. This does not cover all publicly available plugins, since every plugin is a project of its own and can be hosted on GitHub for anyone to clone. It does, however, include all plugins that have been submitted to it as open source.

This can cause pitfalls. If the number of adopters grows exponentially, the number of plugins they want to publish can grow with it. Auditing these plugins takes time away from the core developers, which makes the project itself more intensive to maintain. Scalability is often expressed in numbers that describe the computing size of a project: the number of clusters, RAM, storage and performance. In a similar sense, human time and expertise are also resources that should be considered when scaling up the project.