So far we have discussed many aspects of Express, including its goals, design, and architecture, and we have analysed the software’s components along with its key quality attributes and the quality culture of the project. The resulting picture is one of a robust and solid framework for web applications, qualities that have earned Express its popularity in the web development community. Nonetheless, we want to conclude this series of essays with a detailed report on Express’s performance, scalability, and prospects. This article covers Express’s scalability challenges, a benchmark of its performance on what we deem a relevant metric for web frameworks (requests per second), an analysis of the codebase to identify the design choices that may limit the software’s scalability, and proposed design and architectural tweaks that would mitigate or solve those limitations.

Key Scalability Challenges

As with any web framework, Express has to take into consideration several scalability challenges. After all, no one wants to use a framework that makes their application significantly slower, especially if it is a minimalist framework such as Express. The most commonly tested scalability measures are latency, requests per second, and throughput. These measures are usually tested under varying parameters, such as the size of the web application or the number of concurrent connections, to gain understanding of how scalable the framework is.

The Express repository has an existing benchmark that measures the requests per second achievable for a varying number of middleware functions (that is, how many middleware functions each request passes through). In this benchmark everything other than the middleware count is kept constant: 50 connections over 8 threads for 3 seconds. This suggests that the Express team sees the middleware count (arguably a reasonable approximation of application size and complexity) as the main concern when it comes to scalability. In the next section we examine how Express fares along this scalability dimension, and whether other dimensions make a difference.
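To make the variable in this benchmark concrete, the sketch below builds a chain of near no-op middleware functions and pushes one request through it. It is a simplified stand-in written for this essay, not Express’s own code: `buildChain` and `dispatch` are hypothetical names, and only the `(req, res, next)` shape is borrowed from Express.

```javascript
// Hypothetical stand-in for an Express-style middleware stack.
// Each middleware has the (req, res, next) shape and does almost no work.
function buildChain(count) {
  const stack = [];
  for (let i = 0; i < count; i++) {
    stack.push((req, res, next) => {
      req.visited += 1; // record that the request passed through this layer
      next();
    });
  }
  return stack;
}

// Walk the stack in order, then hand the request to the final handler.
function dispatch(stack, req, res, finalHandler) {
  let index = 0;
  function next() {
    if (index >= stack.length) return finalHandler(req, res);
    const layer = stack[index++];
    layer(req, res, next);
  }
  next();
}

const req = { visited: 0 };
const res = { body: null };
dispatch(buildChain(100), req, res, (request, response) => {
  response.body = 'Hello World';
});
console.log(req.visited, res.body); // 100 Hello World
```

Every extra layer adds one more function call per request, which is why the middleware count is a natural knob to turn in a benchmark.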

Quantitative Scalability Analysis

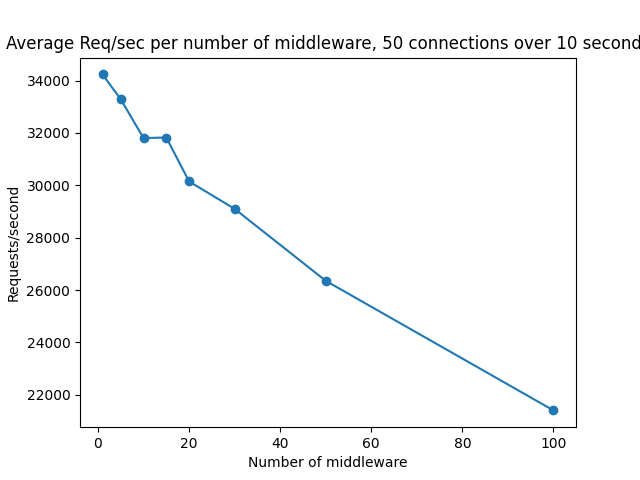

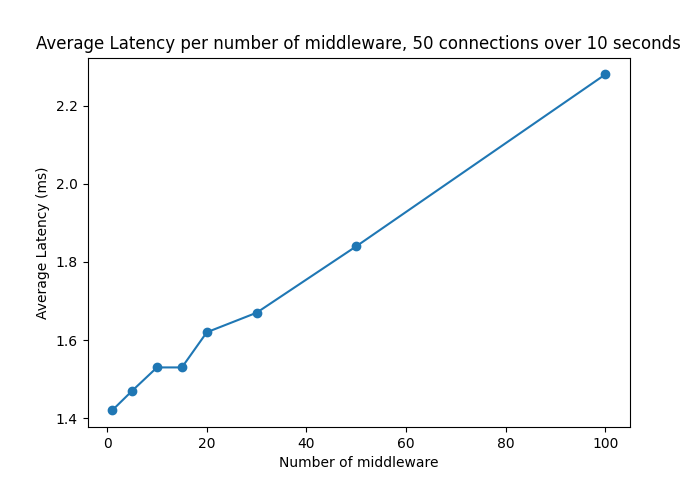

To analyze the scalability of the Express framework we expanded on the existing benchmark included in the Express codebase. This benchmark uses an HTTP benchmarking tool called wrk, whose multi-threaded design can generate significant load from a single multi-core CPU and which reports detailed statistics about the latency, throughput, and requests/second achieved by the application. First, we decided to measure not only requests/second, as Express did, but also latency. The results were the following:

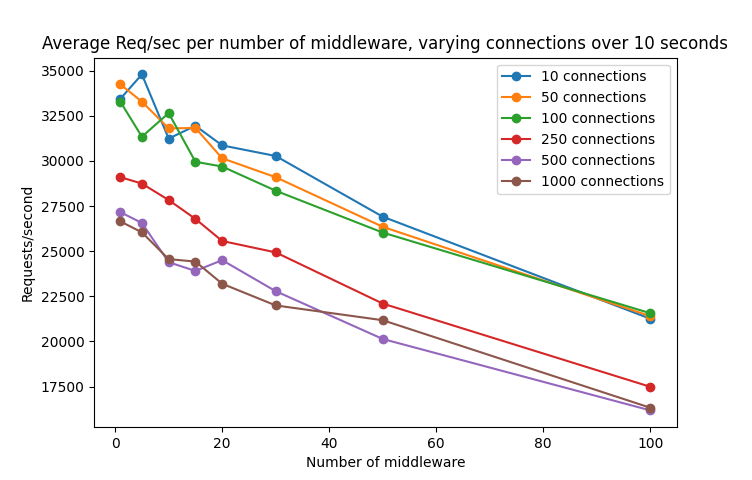

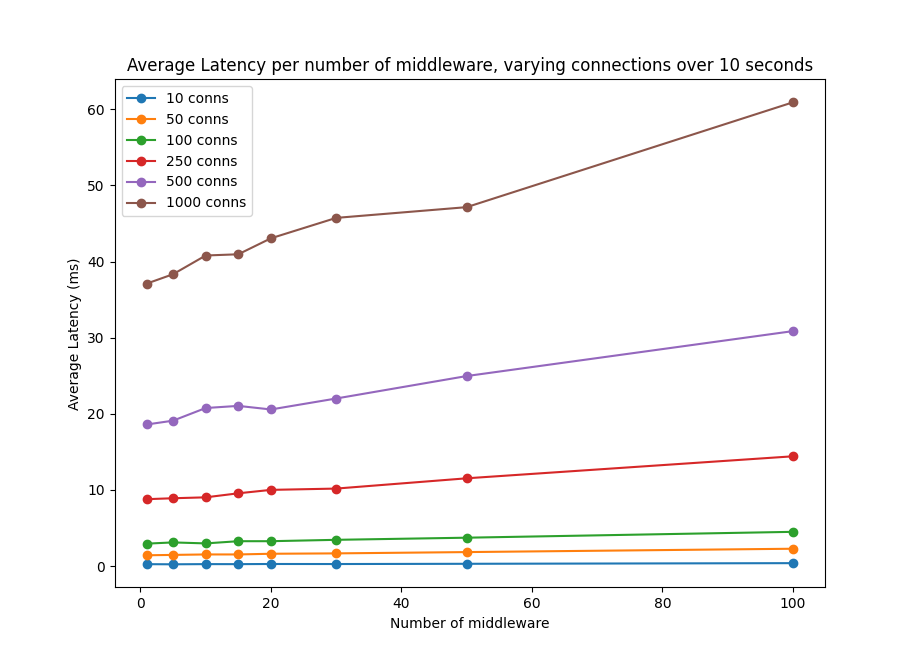

As expected, with more middleware, requests/second goes down while latency goes up. The relationship appears linear, which is also expected, since each middleware probably adds a roughly constant overhead. This experiment only uses 50 connections, though; does the behavior change with a larger number of connections? Let’s see! We repeated the same experiment for a varying number of connections to see how that dimension affects latency and requests/second. We plotted the same graphs as above, with a separate line for each number of connections:

As expected, increasing the number of connections increases latency and decreases requests/second. While the effect of the number of middleware remains roughly linear, for latency the slope appears to increase. This indicates that the latency overhead added by each middleware grows with the number of connections, which is arguably bad news. It shows that a higher number of connections hurts endpoints with more middleware the most, which one might argue limits the scalability of applications with respect to their size.

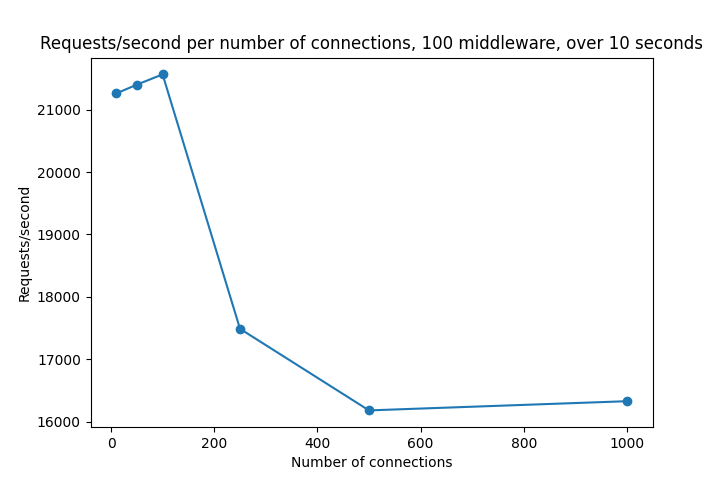

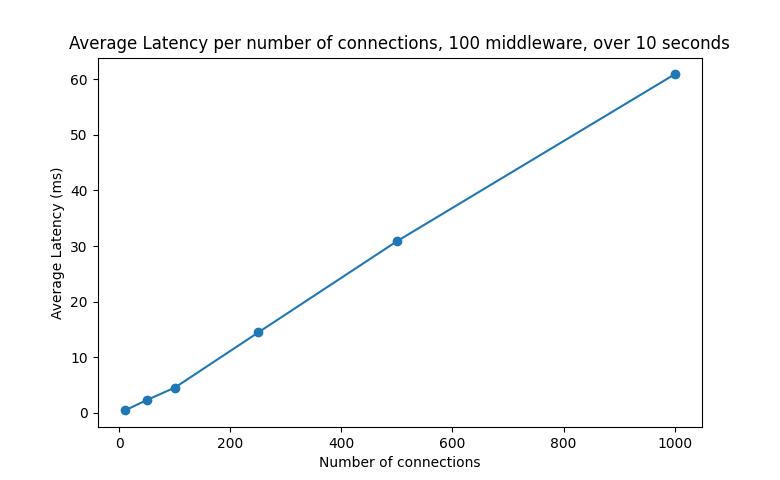

The good news is that, taken by itself, the number of connections seems to affect latency linearly. Its effect on the number of requests per second is less clear, however. The number of requests/second tends to decrease with more connections, but the linearity we see in the other graphs is not present in this case. Here are the plots for a varying number of connections in the 100-middleware case:

Express’ architectural decisions that affect its scalability

Based on the metric discussed in the previous two sections (requests per second) and the results of our benchmarking, we browsed the Express repository to identify some of the architectural decisions that could have led to such behaviour.

While analysing the Express codebase and architecture we identified three main bottlenecks that seem to cause scalability issues: Express’s Router implementation (along with the framework’s general routing approach), the extensive use of nested closures, and the frequent calls to JSON.stringify().

Our analysis of these implementation details mainly concerns the time and space complexity of the algorithms employed throughout the framework.

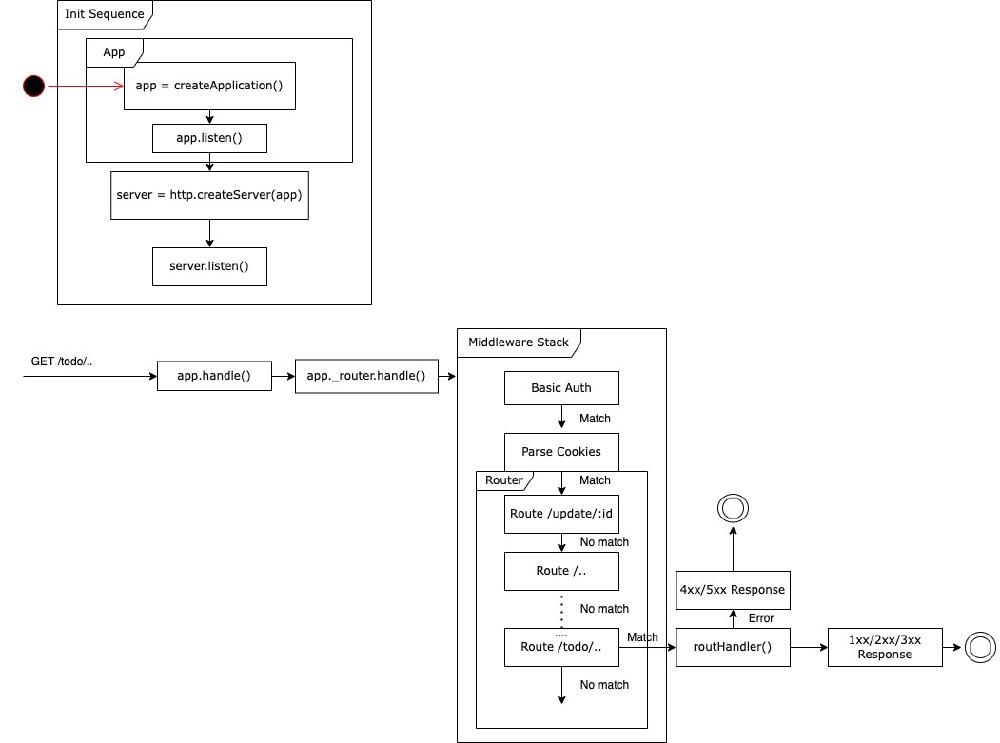

When routing is handled by the Express router, performance in terms of requests per second is rather disappointing compared to faster web frameworks or Node core. This might be due to the memory allocation of the routes and the way exact-match and regex-based lookups are handled. The routes and patterns are not stored in any sort of search-optimised data structure, and thus lookup time grows linearly with the number of routes.
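The linear growth described above can be illustrated with a small model of a router that keeps its routes in a plain array and tests them one by one. This is a deliberately simplified sketch, not the Express Router itself; `routes`, `addRoute`, and `findRoute` are hypothetical names, and the comparison counter exists only to make the cost visible.

```javascript
// Simplified model of a router that stores routes in insertion order.
const routes = [];

function addRoute(pattern, handler) {
  // Exact strings and regular expressions live side by side in one list.
  routes.push({ pattern, handler });
}

function findRoute(path) {
  let comparisons = 0;
  for (const route of routes) {
    comparisons += 1;
    const matched = route.pattern instanceof RegExp
      ? route.pattern.test(path)
      : route.pattern === path;
    if (matched) return { handler: route.handler, comparisons };
  }
  return { handler: null, comparisons };
}

// Register 1000 static routes followed by one regex route.
for (let i = 0; i < 1000; i++) addRoute(`/resource/${i}`, () => i);
addRoute(/^\/users\/\d+$/, () => 'user');

console.log(findRoute('/resource/0').comparisons); // 1
console.log(findRoute('/users/42').comparisons);   // 1001
```

In this model a request for the last registered route pays for every route declared before it, so the worst case grows with the size of the route table.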

Another key detail that affects Express’s scalability is its extensive use of nested closures to sequence asynchronous work. This is particularly relevant for big payloads, and it can cause significant memory pressure. When callback functions are nested, the variables they capture (regardless of whether the later callbacks actually use them) remain in scope for the duration of the entire callback chain. This practice prevents the garbage collector from reclaiming that memory as early as it otherwise could.
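The difference between the two styles can be sketched as follows. The example is illustrative only: `handleNested`, `handleFlat`, `afterParse`, and the synchronous `parse` and `save` stand-ins are hypothetical and not taken from Express. The retention behaviour described in the comments reflects how JavaScript engines generally treat captured variables, and the exact outcome may vary between engines.

```javascript
// Nested style: the innermost callback closes over `payload`, so the whole
// buffer stays reachable until the last callback in the chain has run.
function handleNested(payload, parse, save, done) {
  parse(payload, (record) => {
    save(record, () => {
      done(`stored ${record.id} from ${payload.length} bytes`);
    });
  });
}

// Flatter style: copy out the one value the later steps need, so none of the
// callbacks references `payload` once parsing has finished.
function handleFlat(payload, parse, save, done) {
  const size = payload.length;
  parse(payload, (record) => afterParse(record, size, save, done));
}

function afterParse(record, size, save, done) {
  save(record, () => done(`stored ${record.id} from ${size} bytes`));
}

// Synchronous stand-ins for what would normally be asynchronous steps.
const parse = (buffer, callback) => callback({ id: buffer[0] });
const save = (record, callback) => callback();

const payload = Buffer.alloc(1024, 7);
handleNested(payload, parse, save, console.log); // stored 7 from 1024 bytes
handleFlat(payload, parse, save, console.log);   // stored 7 from 1024 bytes
```

Both versions produce the same result; they differ only in how long the large buffer remains referenced while the remaining steps are pending.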

Lastly, Express makes broad use of JSON.stringify() to serialise payloads and other objects to be sent over HTTP.

Although this function is popular in many web frameworks and is the de facto standard for serialisation, it is a recursive function and therefore not very amenable to optimisation. Nonetheless, this limitation could be partially avoided for small payloads by specifying a schema for JSON object serialisation.

Proposal for architectural changes

Having benchmarked the number of requests per second that Express can successfully serve, and having identified three key architectural choices that, in our opinion, limit the framework’s throughput and latency, we propose three design and implementation changes that we deem valuable for handling those bottlenecks.

In our search for effective solutions, we found it valuable to research how seemingly faster web frameworks handle similar decisions and how their solutions differ from the ones implemented in Express.

As mentioned in the previous section, the three main bottlenecks we identified are the routing algorithm employed in the Router module, the broad use of nested closures and callback functions to sequence asynchronous work, and the broad use of JSON.stringify().

The Router module in particular seems to be an obstacle to Express’s scalability, as its routing algorithm is based on a path lookup (both for exact-match paths and regular expressions) that scales linearly with the number of paths declared as the router’s routes.

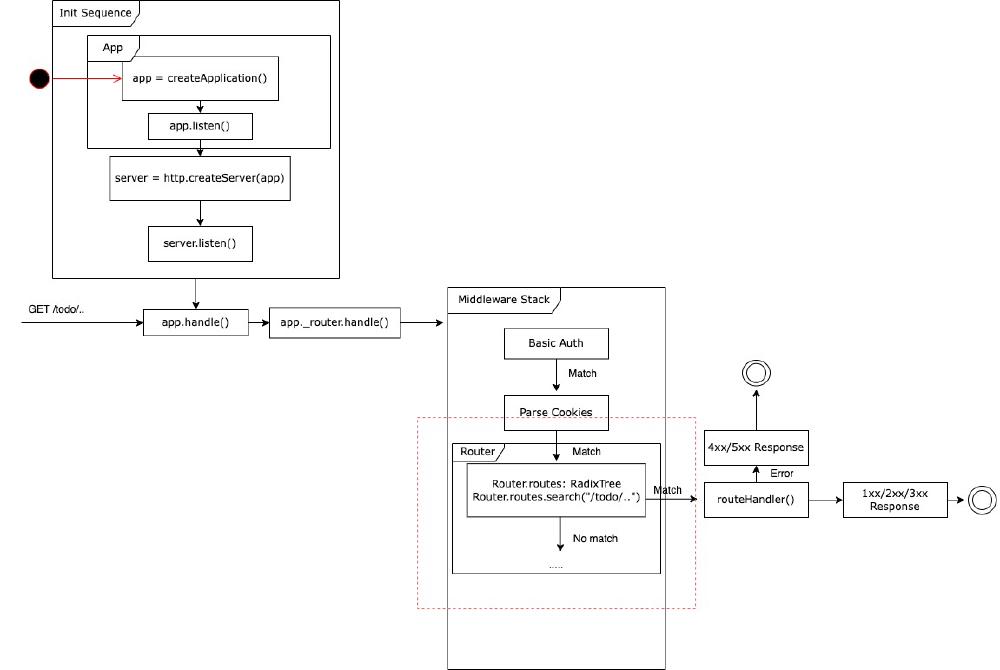

This lookup, which is currently done on a list of paths stored in memory, can be sped up with a more search-optimised data structure that allows lookups with better than linear time complexity. Fastify is reported to be one of the fastest web frameworks in terms of requests handled per second. Its team lists among its key strengths the very fast routing based on find-my-way, a fast HTTP router that uses a highly performant radix tree (a space-optimised prefix tree) to handle path lookup. Such a shift in the internal representation of the routes would allow for a more scalable search algorithm and would improve Express’s performance in terms of latency and throughput.
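A minimal sketch of the idea follows. To stay short it uses a plain per-segment prefix tree rather than the compressed radix tree that find-my-way is described as using, and it does no backtracking between static and parametric branches. `TrieRouter` and `createNode` are hypothetical names and do not correspond to the API of find-my-way or Express.

```javascript
// Each node maps a static path segment to a child, plus at most one
// ":param" child that matches any segment.
function createNode() {
  return { children: new Map(), param: null, handler: null };
}

class TrieRouter {
  constructor() {
    this.root = createNode();
  }

  add(path, handler) {
    let node = this.root;
    for (const segment of path.split('/').filter(Boolean)) {
      if (segment.startsWith(':')) {
        if (!node.param) node.param = { name: segment.slice(1), node: createNode() };
        node = node.param.node;
      } else {
        if (!node.children.has(segment)) node.children.set(segment, createNode());
        node = node.children.get(segment);
      }
    }
    node.handler = handler;
  }

  find(path) {
    let node = this.root;
    const params = {};
    for (const segment of path.split('/').filter(Boolean)) {
      const child = node.children.get(segment);
      if (child) {
        node = child; // static segments take priority over parameters
      } else if (node.param) {
        params[node.param.name] = segment;
        node = node.param.node;
      } else {
        return null;
      }
    }
    return node.handler ? { handler: node.handler, params } : null;
  }
}

const router = new TrieRouter();
for (let i = 0; i < 1000; i++) router.add(`/resource/${i}`, () => i);
router.add('/users/:id', () => 'user');
console.log(router.find('/users/42').params); // { id: '42' }
```

Here a lookup performs one map access per path segment, so its cost follows the length of the requested path rather than the number of registered routes.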

But revising the framework’s data structures may not be enough. The second pitfall we identified as affecting Express’s scalability is the broad use of nested closures which, combined with potentially large payloads, may waste memory by keeping redundant or even unnecessary variables in scope for the duration of the entire callback chain. Simply avoiding nested callbacks would ease the garbage collector’s work and improve memory usage.

Finally, Express makes broad use of the JSON.stringify() method to serialise objects within the web framework. This method, although a popular tool for serialisation, has a recursive implementation that makes it rather difficult to optimise. This pitfall is common to many frameworks that require serialisation. To address this bottleneck, the solution might be to include schema-based serialisation of objects, which would lead to flexible and optimisable serialisation within the framework itself. Fastify’s team implements this solution by means of fast-json-stringify. The tool takes a JSON Schema as input and compiles it into a highly performant stringify function.
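The principle of compiling a schema into a serialiser can be sketched with a deliberately tiny, hand-rolled compiler. This is not the fast-json-stringify API or implementation: `compileSerializer` is a hypothetical function that supports only flat objects with string, number, and boolean properties, and it assumes every number is finite.

```javascript
// Build one small writer per declared property ahead of time, so that
// serialising an object never has to discover its shape at run time.
function compileSerializer(schema) {
  const writers = Object.entries(schema.properties).map(([key, definition]) => {
    const prefix = JSON.stringify(key) + ':';
    if (definition.type === 'string') {
      // Strings still need escaping, so that single step reuses JSON.stringify.
      return (object) => prefix + JSON.stringify(String(object[key]));
    }
    // Finite numbers and booleans can be written out without quoting.
    return (object) => prefix + String(object[key]);
  });
  return (object) => '{' + writers.map((write) => write(object)).join(',') + '}';
}

const stringifyUser = compileSerializer({
  type: 'object',
  properties: {
    id: { type: 'number' },
    name: { type: 'string' },
    admin: { type: 'boolean' },
  },
});

const user = { id: 7, name: 'Ada "the first"', admin: false, password: 'secret' };
console.log(stringifyUser(user));
// {"id":7,"name":"Ada \"the first\"","admin":false}
```

Because only the properties named in the schema are written, the undeclared `password` field is left out of the output, which is a side effect of this design rather than a separate feature.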

We argue that such improvements to the time and space complexity of Express’s core components and tools would greatly benefit its overall scalability.

Impact of the proposed changes

Having assessed the potential of these architectural changes, it is a different matter to estimate the real-world impact they could have. To do so, we analysed them one by one, highlighting the key implementation differences and their effects on space and time complexity.

To begin with the proposed modification to Express’ routing, there is indeed much to discuss about the efficiency of the current feature. Because Express stores its defined paths in a list, the path-matching operation has a time complexity of O(n), where n is the number of paths accepted by the router. The introduction of a radix tree to store that information would instead lead to path lookups on the order of O(dm), where d is the length of the string being matched and m is the size of the alphabet in use. This bound is independent of the number of accepted paths, a number that can grow very quickly for any kind of application.

As for Express’s closures and the potential improvement offered by avoiding them, it is difficult to estimate the impact such a change could have on the system. The cost of nested callbacks depends heavily on payload size, and may not be an issue at all if the system under analysis has sufficient memory and sufficiently small payloads.

However, the scenario changes when comparing Express’ current JSON serialisation approach with the proposed fast-json-stringify alternative. Express makes extensive use of JavaScript’s built-in JSON.stringify function, which recursively looks up how to map an object’s properties to serialised fields in order to return a JSON representation of the object. This implementation leads to a time complexity of O(n) for the stringification process, where n is the number of nodes in the entire object hierarchy; n can grow quickly, resulting in rapidly increasing processing times for each object. To avoid the recursive, unsupervised lookup of an object’s properties, fast-json-stringify takes a different approach and introduces user-defined schemata to speed up the process. Schemata allow developers to define the particular JSON structures that an object can be mapped to, thus fixing its property types, avoiding the recursive lookup entirely, and providing constant-time matching for every schema-defined object. The benefits of such an approach are evident when dealing with recurrent data formats that need to be sent in response objects, particularly as the size of the payload decreases.