Our team had the opportunity to interview Zef Hemel, a former TUD student and now Senior Engineering Lead at Mattermost. The interview covered many topics, from Mattermost in general to very specific questions about its architecture, but we mostly tried to steer the conversation towards scalability.

As the lead of the platform teams at Mattermost, Zef has a clear view of the architecture and gave us great insights into Mattermost's scalability, especially on the server side, which is our focus.

We therefore structure this essay around the storyline that emerged from the interview, as we think it is interesting, original and insightful. Almost all required parts of the assignment are addressed, although in a different order.

Scalability preparedness

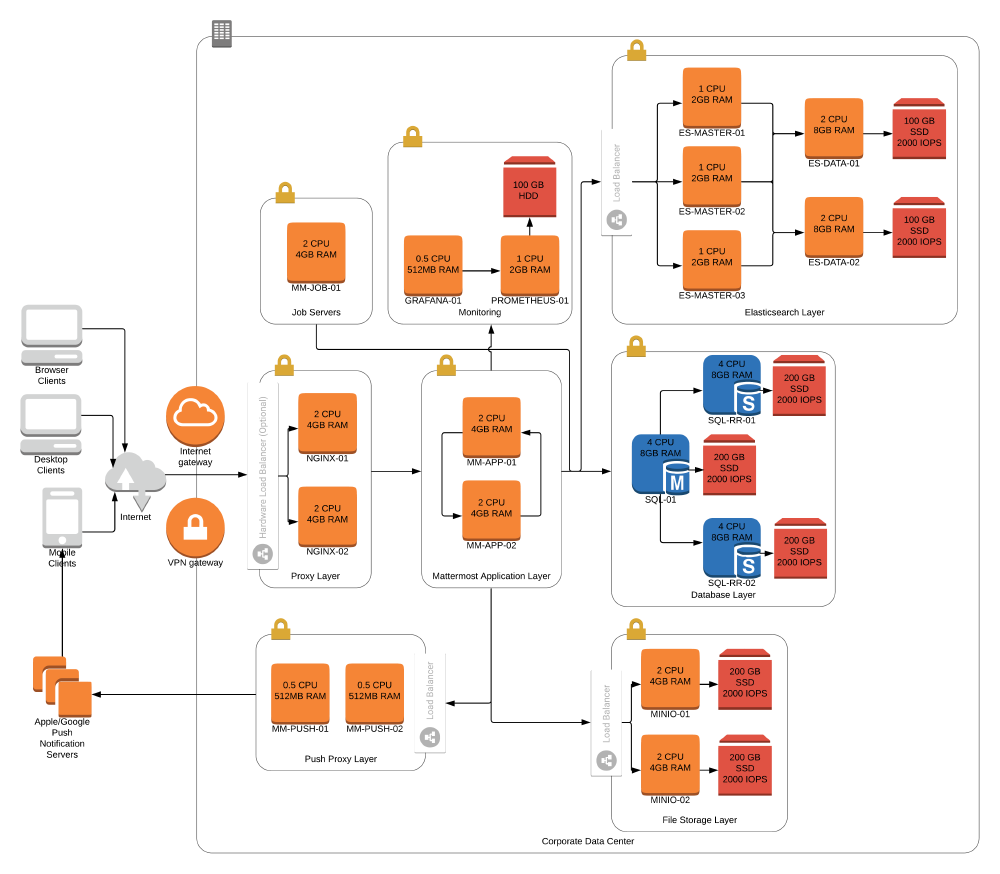

mattermost-server is very well prepared for scalability. As we mentioned in previous essays, Mattermost offers different deployment solutions depending on a customer's number of users, with reference architectures for 5,000, 10,000, 25,000 and 50,000 users1. Figures 1, 2 and 3 show three examples from the Mattermost documentation of “…suggested architecture configurations [for] enterprise deployments of Mattermost at different scales”1.

Figure 1: General deployment for 5,000 users

Figure 2: General deployment for 25,000 users

Comparing figures 1 and 2 shows the differences between a small-scale and a large-scale deployment. The general architecture and its layers are essentially the same; the main difference is that each layer gets more capacity, either by using machines with more CPU and RAM (scaling up), by adding more machines to the layer (scaling out), or both. For instance, the Proxy Layer goes from two NGINX machines with 2 CPUs and 4 GB of RAM each to three machines with 4 CPUs and 16 GB of RAM each.
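To make the Proxy Layer comparison concrete, the aggregate capacity of a layer is simply the number of machines times the resources per machine. The minimal Go sketch below computes this for the two Proxy Layer configurations described above; the `LayerConfig` type is a hypothetical helper, and it assumes capacity adds up linearly, ignoring per-machine overhead.

```go
package main

import "fmt"

// LayerConfig describes one layer of a deployment (hypothetical helper type).
type LayerConfig struct {
	Machines int // number of dedicated machines in the layer (scaling out)
	CPUs     int // CPU cores per machine (scaling up)
	RAMGB    int // RAM per machine, in GB (scaling up)
}

// TotalCPUs returns the aggregate CPU cores across all machines in the layer.
func (l LayerConfig) TotalCPUs() int { return l.Machines * l.CPUs }

// TotalRAMGB returns the aggregate RAM, in GB, across all machines in the layer.
func (l LayerConfig) TotalRAMGB() int { return l.Machines * l.RAMGB }

func main() {
	// Proxy Layer figures from the 5,000- and 25,000-user deployments.
	small := LayerConfig{Machines: 2, CPUs: 2, RAMGB: 4}
	large := LayerConfig{Machines: 3, CPUs: 4, RAMGB: 16}

	fmt.Printf("5,000 users:  %d CPUs, %d GB RAM\n", small.TotalCPUs(), small.TotalRAMGB())
	fmt.Printf("25,000 users: %d CPUs, %d GB RAM\n", large.TotalCPUs(), large.TotalRAMGB())
}
```

Under this simple model the Proxy Layer grows from 4 to 12 CPUs (3x) and from 8 GB to 48 GB of RAM (6x), combining a moderate scale-out with a larger scale-up.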

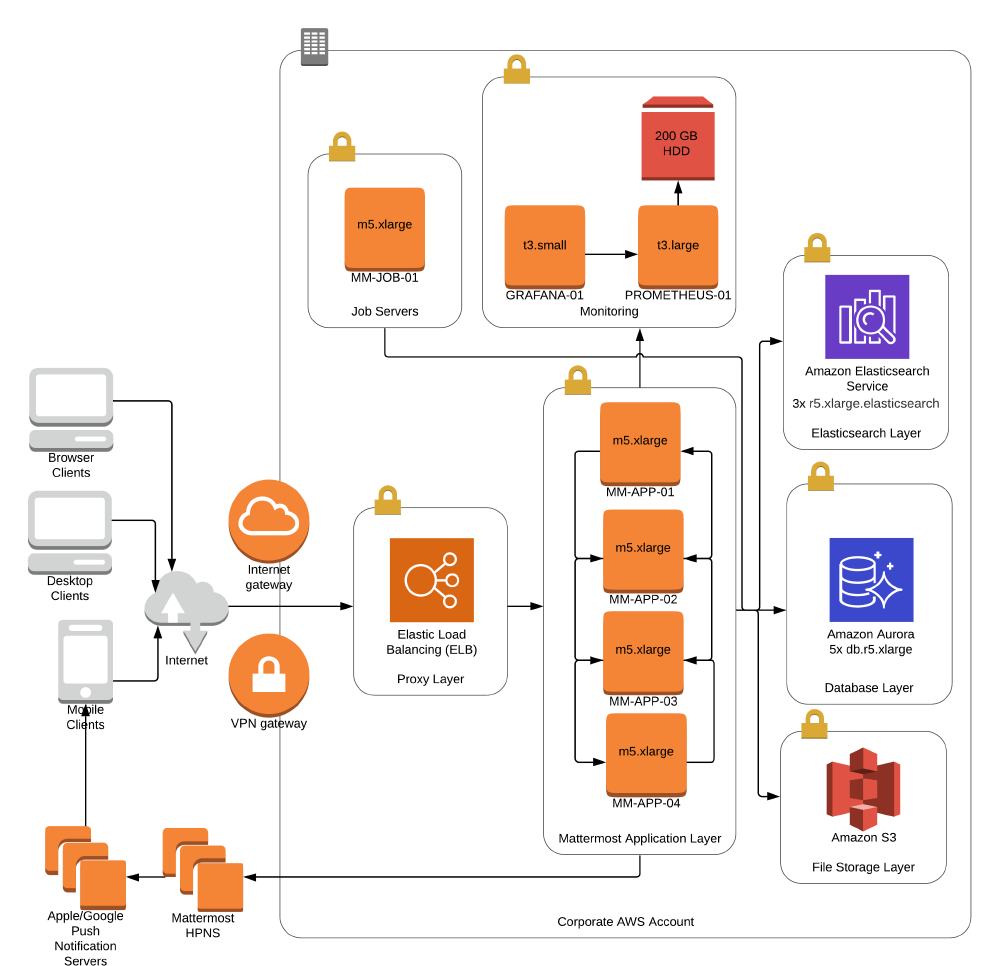

Figure 3: AWS deployment for 25,000 users

The diagram in figure 3 is much more abstract, as the architecture relies on a third party, Amazon Web Services (AWS). Up to 25,000 users, both general and AWS deployments exist. Since the general deployment runs entirely on the customer's own machines, customers of this size can even run Mattermost without any internet connection. For more than 25,000 users, however, only a cloud solution on AWS is available.

Load tests have been run on Mattermost with 60,000 concurrent users1. The biggest Mattermost customer has only around 40,000 users2, so Mattermost's tested capacity is 50% higher than what its biggest customer actually needs. This margin can be considered large enough, especially since it is unlikely that any company or institution will have more than 60,000 concurrent users in the near future.
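The 50% figure is a simple headroom calculation: (60,000 − 40,000) / 40,000 = 0.5. Because a customer's concurrent users can never exceed its total users, comparing tested concurrent users against total users gives a conservative, lower-bound estimate of the margin. A minimal Go sketch, where `headroom` is a hypothetical helper:

```go
package main

import "fmt"

// headroom returns how much larger the tested capacity is than the demand,
// as a fraction of the demand (0.5 means 50% extra capacity).
func headroom(tested, demand float64) float64 {
	return (tested - demand) / demand
}

func main() {
	// 60,000 concurrent users in load tests vs. ~40,000 users at the largest customer.
	h := headroom(60000, 40000)
	fmt.Printf("headroom: %.0f%%\n", h*100) // prints "headroom: 50%"
}
```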

In short, the mattermost-server Go binary itself does not present any scalability issues for current Mattermost usage, so it has no scalability challenge of its own to overcome. However, other factors create crucial bottlenecks that can limit the scalability of Mattermost, as detailed in the following sections.

Plugins and their effect on scalability

Mattermost allows the creation of plugins: custom programs that extend the functionality of the core Mattermost application3. While we mentioned in earlier posts that the plugin system greatly increases the modularity of the system, it can come with a performance penalty.

Plugins can be enabled individually in each server's configuration. When a plugin is enabled, mattermost-server starts the plugin's server binary as a separate process, so every enabled plugin runs as its own subprocess. This approach has its advantages and works fine when only a few plugins are enabled. However, the more plugins are enabled, the longer the server takes to start up. Furthermore, this can significantly increase costs in the cloud, as Zef explained:

“If you have a cluster of 10 machines (on the cloud) - those are complete replicas let’s say . . . if you have 20 plugins, that means that you spin up a Mattermost server with 20 plugin subprocesses that also use memory on each of those 10 nodes. Which means if you have 20 plugins 10 times - you get 200 instances of plugins running . . . and we actually see that customers tend to stick with Mattermost because they are heavy on the plugins . . . but, even booting one of those pods (server nodes) with all of those plugins starts to become very slow and memory-hungry.”2
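The multiplication in the quote can be written down directly: with every node a full replica, the number of plugin subprocesses is nodes × plugins, and their memory use scales the same way. The Go sketch below uses the quote's 10 nodes and 20 plugins; the 50 MB per plugin process is a purely hypothetical figure chosen for illustration, not a measured Mattermost value, and `clusterPluginFootprint` is a hypothetical helper.

```go
package main

import "fmt"

// clusterPluginFootprint returns the number of plugin subprocesses in a cluster
// where every node is a full replica running every enabled plugin, plus the
// total memory they use given an assumed per-process memory cost.
func clusterPluginFootprint(nodes, plugins, memPerPluginMB int) (processes, totalMB int) {
	processes = nodes * plugins
	totalMB = processes * memPerPluginMB
	return processes, totalMB
}

func main() {
	// 10 replica nodes and 20 plugins, as in the interview quote.
	// 50 MB per plugin process is a hypothetical assumption.
	procs, mb := clusterPluginFootprint(10, 20, 50)
	fmt.Printf("%d plugin processes, ~%d MB\n", procs, mb) // prints "200 plugin processes, ~10000 MB"
}
```

The key point is that the footprint grows with the product of cluster size and plugin count, so adding either more nodes or more plugins multiplies the cost.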

There are potential solutions to these problems. As mentioned in the interview, one solution would be to take the plugins built by Mattermost itself out of the plugin model and build them back into the core. For external parties, Mattermost created the Apps Framework, which lets developers “Extend and integrate Mattermost with apps written in any language and hosted anywhere”4.

Under the Apps Framework, developers can use any language that supports HTTP, and their apps are deployed separately from Mattermost. Because apps run as external web services, they do not affect the server's boot time. And since they are not replicated alongside every server node, they also reduce cloud costs through lower memory consumption. The difference in integration structure between plugins and apps is shown in figure 4. The Apps Framework has its drawbacks: an app cannot directly alter the Mattermost user interface the way a plugin can5. Furthermore, when low latency to the server is required, plugins remain the better choice. However, the Apps Framework is expected to improve as more UX (user experience) hooks are introduced5.

Figure 4: Difference in integration structure between plugins and apps

Service oriented architecture

The monolithic architecture has worked well for the adoption of Mattermost by the community. Since many users and customers deploy Mattermost themselves on their own infrastructure, convenience is very important, and shipping a single compiled Go binary serves this goal.

“. . . we want to ship a single binary, point it at the right database, this is the frontend bundle, and boom!…”2

This is expected to remain the case for the foreseeable future.

Although the monolith works in favour of self-hosting customers, offering Mattermost as a cloud service presents new challenges to the Mattermost team. As Zef mentioned, Mattermost started out as a single-tenant6 system, that is, an isolated deployment for each customer. As a result, every customer that chooses Mattermost Cloud today gets a new instance with its own database, isolated from other Mattermost Cloud customers. As illustrated in figure 5, this makes it difficult to scale Mattermost Cloud to a growing number of customers, not in terms of throughput or performance, but in terms of infrastructure and cost. Users on a free tier trigger the creation of this new infrastructure and may never actually use it, a very plausible scenario in freemium offerings, so smart optimizations are needed to cut costs.

Figure 5: Single- vs. multi-tenancy architectures

“If we had built for cloud since the beginning, we would have a multi-tenant system…”2

A possible solution would be to adopt a service-oriented, multi-tenant architecture just for Mattermost Cloud. The team's goal is to logically separate the server's functionality into components with well-defined interfaces, which would make it easier to migrate to a microservices architecture if needed in the future. In such a scenario, multiple Mattermost Cloud customers would share the same infrastructure and the same database instance while remaining logically separated from each other. Our analysis of important pull requests in the previous post shows that some work towards this objective is already under way: the code is being refactored, for example by making many App layer methods private, to simplify future work by the teams building microservices7.
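The two ideas in this paragraph, narrow component interfaces and logical separation of tenants in shared infrastructure, can be sketched together in Go. This is not Mattermost's code: `PostStore`, `Post`, and the tenant IDs are hypothetical, and an in-memory map stands in for a shared database in which each row would carry a tenant column. Exposing only an interface with an unexported implementation mirrors, in spirit, the refactoring that makes App layer methods private.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Post is a minimal message record; TenantID identifies the cloud customer.
type Post struct {
	TenantID string
	ID       string
	Message  string
}

// PostStore is a hypothetical component interface. Every method takes a tenant
// ID, so one shared instance can serve many customers while keeping them apart.
type PostStore interface {
	Save(tenantID string, p Post) error
	List(tenantID string) []Post
}

// memoryPostStore is unexported: other components can only reach it through
// PostStore, which keeps the component boundary narrow and well defined.
type memoryPostStore struct {
	mu    sync.RWMutex
	posts map[string][]Post // keyed by tenant ID
}

// NewPostStore returns the shared store as the interface type.
func NewPostStore() PostStore {
	return &memoryPostStore{posts: make(map[string][]Post)}
}

var errTenantMismatch = errors.New("post belongs to a different tenant")

// Save rejects writes where the post's tenant differs from the caller's tenant.
func (s *memoryPostStore) Save(tenantID string, p Post) error {
	if p.TenantID != tenantID {
		return errTenantMismatch
	}
	s.mu.Lock()
	defer s.mu.Unlock()
	s.posts[tenantID] = append(s.posts[tenantID], p)
	return nil
}

// List returns only the calling tenant's posts, as a copy of the internal slice.
func (s *memoryPostStore) List(tenantID string) []Post {
	s.mu.RLock()
	defer s.mu.RUnlock()
	out := make([]Post, len(s.posts[tenantID]))
	copy(out, s.posts[tenantID])
	return out
}

func main() {
	store := NewPostStore() // one shared instance for all tenants
	_ = store.Save("acme", Post{TenantID: "acme", ID: "p1", Message: "hello"})
	_ = store.Save("globex", Post{TenantID: "globex", ID: "p2", Message: "hi"})
	fmt.Println(len(store.List("acme")), len(store.List("globex"))) // prints "1 1"
}
```

Because callers depend only on the interface, the in-memory implementation could later be swapped for one backed by a separate microservice without changing them.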

Database bottlenecks

We saw that Mattermost is very well prepared to support a large volume of users per customer. The team has detailed reference architectures and has performed extensive load testing for up to 60,000 concurrent users1. While customers have not complained about Mattermost's scalability as such, one bottleneck is relatively common across most customers' deployments.

“. . . most of the issues end up being at the database layer, and is fixed by adding an index or something similar…”2
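As a toy illustration of why adding an index usually fixes such problems, the Go sketch below contrasts a full scan with an index lookup over in-memory rows. It is not Mattermost code: the `Row` type and channel IDs are hypothetical, and a hash map stands in for the B-tree indexes that relational databases such as PostgreSQL and MySQL use by default. The point is only that an index lets a query touch the matching rows instead of every row.

```go
package main

import "fmt"

// Row is a simplified table row: a post and the channel it belongs to.
type Row struct {
	PostID    int
	ChannelID string
}

// scanByChannel mimics a query without an index: every row is examined.
func scanByChannel(rows []Row, channelID string) (matches []int, examined int) {
	for _, r := range rows {
		examined++
		if r.ChannelID == channelID {
			matches = append(matches, r.PostID)
		}
	}
	return matches, examined
}

// buildIndex mimics creating an index on ChannelID: it maps each channel to
// the positions of its rows.
func buildIndex(rows []Row) map[string][]int {
	idx := make(map[string][]int)
	for i, r := range rows {
		idx[r.ChannelID] = append(idx[r.ChannelID], i)
	}
	return idx
}

// lookupByChannel uses the index, so it examines only the matching rows.
func lookupByChannel(rows []Row, idx map[string][]int, channelID string) (matches []int, examined int) {
	for _, i := range idx[channelID] {
		examined++
		matches = append(matches, rows[i].PostID)
	}
	return matches, examined
}

func main() {
	// 10,000 posts spread evenly over 100 hypothetical channels.
	rows := make([]Row, 0, 10000)
	for i := 0; i < 10000; i++ {
		rows = append(rows, Row{PostID: i, ChannelID: fmt.Sprintf("ch%d", i%100)})
	}
	_, scanned := scanByChannel(rows, "ch7")
	_, indexed := lookupByChannel(rows, buildIndex(rows), "ch7")
	fmt.Println(scanned, indexed) // prints "10000 100"
}
```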

These issues are usually easy to fix, but some features take an unusually heavy toll on the database. The collapsed reply threads feature, which is in beta at the time of writing, seems straightforward at first glance.

“. . . in reality, this is extremely complex to do and scale. (It needs managing of) all users and their subscriptions to all the threads, and who should get notifications. This quickly grows into a lot of tables (and joins). This gets very costly for the database…”2

Such features are usually kept in beta until they can be properly optimized. Mattermost communicates the risks of enabling such features and warns customers to expect increased demands on server and database resources8. Currently, enabling this feature requires scaling up the database instance. Possible alternatives could be query-level optimizations, changes to the existing database schemas, or caching users' subscriptions to reply threads.
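The last alternative, caching subscriptions, can be sketched as a read-through cache: the expensive, join-heavy query that finds a thread's subscribers runs only on a cache miss, and the entry is invalidated when someone follows or unfollows the thread. This Go sketch is an assumption-based illustration, not how Mattermost implements collapsed reply threads; `SubscriptionCache` and the `loadFunc` standing in for the database query are hypothetical. For simplicity it holds the lock while loading, which serializes misses.

```go
package main

import (
	"fmt"
	"sync"
)

// loadFunc is a hypothetical database query returning the IDs of users
// subscribed to a thread (the expensive part that would otherwise run each time).
type loadFunc func(threadID string) []string

// SubscriptionCache is a read-through cache of thread subscribers.
type SubscriptionCache struct {
	mu    sync.Mutex
	load  loadFunc
	cache map[string][]string
}

func NewSubscriptionCache(load loadFunc) *SubscriptionCache {
	return &SubscriptionCache{load: load, cache: make(map[string][]string)}
}

// Subscribers returns who should be notified about a new reply in threadID,
// querying the database only on a cache miss.
func (c *SubscriptionCache) Subscribers(threadID string) []string {
	c.mu.Lock()
	defer c.mu.Unlock()
	subs, ok := c.cache[threadID]
	if !ok {
		subs = c.load(threadID)
		c.cache[threadID] = subs
	}
	return append([]string(nil), subs...) // copy so callers cannot modify the cache
}

// Invalidate drops the cached entry, e.g. after a user follows or unfollows
// the thread, so the next read reloads it from the database.
func (c *SubscriptionCache) Invalidate(threadID string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.cache, threadID)
}

func main() {
	db := map[string][]string{"thread-1": {"alice", "bob"}} // fake subscriptions table
	queries := 0
	cache := NewSubscriptionCache(func(threadID string) []string {
		queries++
		return append([]string(nil), db[threadID]...)
	})

	cache.Subscribers("thread-1")
	cache.Subscribers("thread-1") // served from the cache, no query
	db["thread-1"] = append(db["thread-1"], "carol") // carol follows the thread
	cache.Invalidate("thread-1")

	subs := cache.Subscribers("thread-1")
	fmt.Println(subs, queries) // prints "[alice bob carol] 2"
}
```

In a clustered deployment the invalidation would also have to reach the other nodes, which is part of what makes this harder than it looks.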

Ideal further steps for Mattermost

From the very valuable insights we gained from talking to Zef, and from our own evaluation of the Mattermost system, scalability issues in Mattermost are rarely a direct result of the mattermost-server component. The volume of concurrent users is not an issue either, given the multiple tested deployment configurations. Instead, architectural decisions around the broader system, such as the current plugin model, the move to the cloud with a single-tenant monolith, and the growth of new use cases like collapsed reply threads, cause problems with efficiency, performance (startup times) and cost.

We tried to understand what the ideal path would be if Mattermost hypothetically had infinite time and manpower to build, modify and revamp their software. According to Zef, they would accelerate what they are doing today rather than take a different direction. The further steps for Mattermost would then look like this:

- Respecting Conway’s law, reorganize the teams across the company and refactor mattermost-server into logically separate components with well-defined interfaces, to ease a future transition to a multi-tenant microservices architecture if needed. Achieve more granular control over each functionality in the process.

- Revamp the plugin system by bundling first-party plugins into the single Go binary and asking third parties to host their own integrations, improving startup times and reducing cloud costs.

- Continue to optimize database interactions to ease the most common pain point for customers.

In conclusion, Mattermost has clear directions for improving the efficiency, performance and cost of its offerings in multiple scenarios. Some of these undertakings can be achieved solely by improving their own architecture, while others require the cooperation of third-party companies that build integrations. We expect continual improvements driven by the changing needs of the market.

1. Mattermost Docs. Architecture overview. https://docs.mattermost.com/getting-started/architecture-overview.html
2. Information from the interview with Zef Hemel.
3. Mattermost. Mattermost Marketplace. https://mattermost.com/marketplace/
4. Mattermost. Announcing developer preview of the Mattermost Apps Framework and serverless hosting. https://mattermost.com/blog/mattermost-apps-framework-serverless-hosting-preview/
5. Mattermost Developers. Mattermost Apps. https://developers.mattermost.com/integrate/apps/
6. Cloudzero. Single-Tenant Vs. Multi-Tenant Cloud: Which Should You Use? https://www.cloudzero.com/blog/single-tenant-vs-multi-tenant
7. Pull Request. Changing from public to private a lot of methods in App layer. https://github.com/mattermost/mattermost-server/pull/18395
8. Mattermost Docs. Collapsed reply threads - Known issues. https://docs.mattermost.com/channels/organize-conversations.html#organize-conversations-using-collapsed-reply-threads