Previously, we discussed what the Moby project is, how it relates to its downstream product, Docker, and how its vision helped shape the modern containerization ecosystem. This time, we will discuss the architectural decisions made by the Docker team and describe the structure of the ecosystem.

Although Docker can work with many operating systems that provide process isolation capabilities, the rest of this essay is presented mainly from the perspective of Linux containers, since they are the most thoroughly studied and most widely used variant.

System architecture and design principles

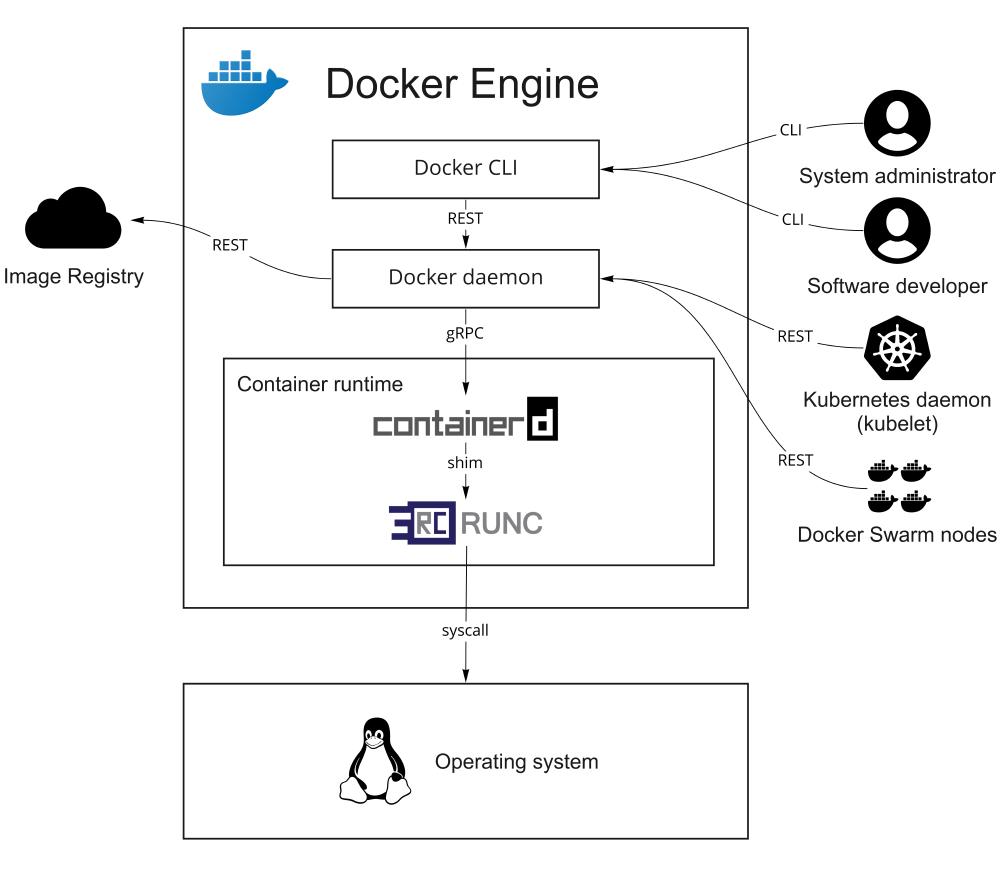

Figure: Context view

The architecture of the Moby project[1] revolves around the Docker daemon: the process that governs every aspect of the container lifecycle and provides a layer of abstraction on top of the operating system’s process isolation features.

Containers are created from templates called images. Each image consists of layers, which are combined during container creation into a filesystem that the processes inside the container access instead of the host’s. This means that unless specific host directories are explicitly mapped into the container, the processes running inside it have no way of accessing the host’s files. Moreover, whenever a containerized process executes another binary or loads a shared library, it uses the container’s version instead of the host’s; the host operating system does not even need to have those binaries or libraries installed. From the perspective of the process, its environment looks like a dedicated virtual machine: it can access neither the host’s filesystem nor the host’s devices, processes, or any other resources that can be isolated.

The immutability of images and the lightweight container creation process led to containers being treated as ephemeral. Instead of modifying a container’s contents manually, it is more practical to make the changes in the image’s Dockerfile and then recreate the container. This is especially important when containers are deployed in bulk on multiple machines. Because images can be defined in Dockerfiles, they can be stored and versioned in code repositories, which was a huge step in popularizing the infrastructure as code (IaC) approach.

Containers view

Figure: Containers view

The Docker engine consists of two primary containers (here "container" is meant in the architectural sense of a separately running unit, not a Docker container). It follows a client-server structure: the daemon implements the functionality, and the CLI serves as its interface. The daemon manages local Docker objects such as images, containers, networks, and volumes[2]. By exposing a standardized API, the daemon allows the CLI and other programs to interact with it, for example when forming a cluster of daemons.

The Docker daemon relies on a container runtime to use the operating system’s process isolation capabilities. The first public version of Docker, released in 2013, had a monolithic structure and communicated directly with LXC, a Linux container runtime. However, to allow for modularity and support for other operating systems, the containerd[3] runtime was developed as an abstraction on top of the operating system’s process isolation functionality. It remains the main container runtime of the Moby project to this day.
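To make the modularity argument concrete, the sketch below shows a daemon programming against an abstract runtime interface rather than a concrete runtime, so that one implementation could in principle be swapped for another. `ContainerRuntime`, `FakeRuntime`, and `Daemon` are hypothetical names chosen for illustration; containerd’s real API is exposed over gRPC and is considerably richer.

```python
from typing import Protocol


class ContainerRuntime(Protocol):
    """Hypothetical interface the daemon programs against."""

    def create(self, container_id: str, image: str) -> None: ...
    def start(self, container_id: str) -> None: ...


class FakeRuntime:
    """In-memory stand-in for containerd or another runtime (illustrative only)."""

    def __init__(self) -> None:
        self.state: dict[str, str] = {}

    def create(self, container_id: str, image: str) -> None:
        self.state[container_id] = "created"

    def start(self, container_id: str) -> None:
        if self.state.get(container_id) != "created":
            raise RuntimeError(f"{container_id} is not in the created state")
        self.state[container_id] = "running"


class Daemon:
    """Depends only on the interface, never on a concrete runtime class."""

    def __init__(self, runtime: ContainerRuntime) -> None:
        self.runtime = runtime

    def run(self, container_id: str, image: str) -> None:
        self.runtime.create(container_id, image)
        self.runtime.start(container_id)
```

Any object with matching `create` and `start` methods can be passed to `Daemon`, which is the property that lets the runtime layer evolve independently of the daemon.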

Another essential part of the Docker ecosystem is the image registry. If the daemon does not find the required image in its local storage, it can pull it from a remote registry. This allows for storage savings and removes the need to deploy images manually to potentially many machines.
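The pull-on-miss behavior can be sketched as a local cache in front of a registry. `Registry` and `LocalStore` are hypothetical in-memory stand-ins; a real daemon talks to the registry over HTTP and fetches individual layers rather than one blob per image.

```python
class Registry:
    """Hypothetical remote registry: image reference -> image data."""

    def __init__(self, images: dict[str, bytes]) -> None:
        self.images = images
        self.pulls = 0  # counts network round trips, for illustration

    def pull(self, ref: str) -> bytes:
        self.pulls += 1
        if ref not in self.images:
            raise LookupError(f"image {ref!r} not found in registry")
        return self.images[ref]


class LocalStore:
    """Hypothetical daemon-side image storage."""

    def __init__(self, registry: Registry) -> None:
        self.registry = registry
        self.images: dict[str, bytes] = {}

    def get_image(self, ref: str) -> bytes:
        # Use the local copy when present; otherwise pull once and keep it.
        if ref not in self.images:
            self.images[ref] = self.registry.pull(ref)
        return self.images[ref]
```

Asking for the same image twice results in a single pull; every machine pointed at the same registry obtains images this way instead of receiving them by hand.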

Components view

Figure: Components view

Container images can be defined in a text document called a Dockerfile[4], which declares a base image and a list of instructions to apply to it. The changes that each filesystem-modifying instruction (such as RUN, COPY, or ADD) makes to the image’s filesystem are persisted as a new layer. By applying the differences introduced by all layers in the order of their creation, it is possible to recreate the desired image.
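Reconstructing an image from its layers amounts to replaying filesystem diffs oldest-first. In this simplified model, assumed for illustration, a layer is a dict from path to file contents, and `None` stands in for the whiteout entries that real layers use to record deletions; actual layers are tar archives.

```python
from typing import Optional

# A layer maps file paths to new contents; None marks a deletion
# (a stand-in for the whiteout files found in real image layers).
Layer = dict[str, Optional[bytes]]


def apply_layers(layers: list[Layer]) -> dict[str, bytes]:
    """Replay layers in creation order to reconstruct the image filesystem."""
    fs: dict[str, bytes] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                fs.pop(path, None)  # a later layer deleted the file
            else:
                fs[path] = content  # a later layer added or overwrote the file
    return fs


base = {"/bin/sh": b"busybox", "/etc/motd": b"hello"}  # e.g. the FROM image
install = {"/usr/bin/curl": b"curl"}                     # e.g. a RUN that installs curl
cleanup = {"/etc/motd": None}                            # e.g. a RUN that deletes a file
print(apply_layers([base, install, cleanup]))
# {'/bin/sh': b'busybox', '/usr/bin/curl': b'curl'}
```

Because each layer records only its own changes, two images built from the same base can share the base layer unchanged.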

Each image has a manifest that lists the digests of its layers (the older manifest format also recorded the image name, tag, and signature). Because a digest is a collision-resistant hash of the data, it allows the daemon to verify the integrity of data pulled from the registry and to identify the layers that images have in common. This is a highly desirable property: when many images share common base layers, those layers need to be stored only once, leading to substantial storage savings on both the registry and the daemon side.
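Content addressing can be sketched with SHA-256: a blob is stored under its own digest, so identical layers collapse into one entry and corruption is detectable on read. `BlobStore` is a hypothetical name; only the `sha256:<hex>` digest notation mirrors what real manifests use.

```python
import hashlib


def digest(blob: bytes) -> str:
    """Content address in the 'algorithm:hex' notation used by image manifests."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


class BlobStore:
    """Content-addressable storage: identical layers are stored only once."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def put(self, blob: bytes) -> str:
        d = digest(blob)
        self.blobs.setdefault(d, blob)  # a second copy of the same layer is a no-op
        return d

    def get(self, d: str) -> bytes:
        blob = self.blobs[d]
        # Re-hash on read so corrupted or tampered data is detected.
        if digest(blob) != d:
            raise ValueError(f"digest mismatch for {d}")
        return blob


store = BlobStore()
base = b"debian base layer"
image_a = [store.put(base), store.put(b"app A files")]  # layer digests of image A
image_b = [store.put(base), store.put(b"app B files")]  # layer digests of image B
```

Both images reference four layers in total, but the store holds only three blobs, because the shared base layer has the same digest in both lists.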

Images become containers at runtime. Containers can communicate with each other thanks to the engine’s networking support, which creates virtual networks in which each container has its own IP address and hostname.
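As a rough illustration, the toy model below hands out one address per container from a subnet and lets containers find each other by name, loosely mirroring how a user-defined network resolves container names. The `VirtualNetwork` class and the `172.18.0.0/16` subnet are assumptions made for the example, not Docker’s implementation.

```python
import ipaddress


class VirtualNetwork:
    """Toy network model: each connected container gets an IP and a resolvable name."""

    def __init__(self, subnet: str) -> None:
        hosts = iter(ipaddress.ip_network(subnet).hosts())
        self.gateway = next(hosts)  # first usable address reserved for the bridge
        self._free = hosts          # remaining addresses handed out in order
        self.dns: dict[str, ipaddress.IPv4Address] = {}

    def connect(self, name: str) -> ipaddress.IPv4Address:
        if name in self.dns:
            raise ValueError(f"{name!r} is already connected")
        ip = next(self._free)
        self.dns[name] = ip  # other containers can now reach it by name
        return ip

    def resolve(self, name: str) -> ipaddress.IPv4Address:
        return self.dns[name]


net = VirtualNetwork("172.18.0.0/16")
db_ip = net.connect("db")
web_ip = net.connect("web")
```

With this model, a web container configured to reach `db` by hostname keeps working even if the database container is recreated and receives a different address.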

The Docker daemon has a built-in container orchestration capability called Docker Swarm[5]. It allows multiple daemon instances to form a cluster and uses the Raft consensus algorithm[6] to agree on the cluster’s state. Each daemon runs in one of two modes: manager or worker. Managers hold and exchange the cluster state and accept administrative requests through the API. Workers run the workloads that the managers schedule on them. Instead of scheduling container creation on a particular machine, engineers tell the managers how many containers of each type should run on the cluster as a whole. The managers then continuously reconcile this desired state with the actual state, starting or stopping containers so that all scheduled workloads stay operational[7].
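Two parts of this behavior lend themselves to a short sketch: the majority quorum that Raft needs among managers, and a reconciliation step that compares desired and running replica counts. These functions are hypothetical simplifications; the real orchestrator also has to deal with placement, health, and node failures.

```python
def quorum(managers: int) -> int:
    """Votes Raft needs to commit a change: a strict majority of managers."""
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """Managers that can be lost while a majority remains: (N - 1) // 2."""
    return (managers - 1) // 2


def reconcile(desired: dict[str, int], running: dict[str, int]) -> dict[str, int]:
    """Return how many tasks to start (+) or stop (-) per service."""
    actions: dict[str, int] = {}
    for service in desired.keys() | running.keys():
        delta = desired.get(service, 0) - running.get(service, 0)
        if delta:
            actions[service] = delta
    return actions


# Three web replicas desired but only one running; a removed service still has two.
plan = reconcile({"web": 3, "db": 1}, {"web": 1, "db": 1, "old": 2})
```

Here `plan` asks for two more `web` tasks and two fewer `old` tasks, while `db` is left alone. The quorum arithmetic also explains why odd manager counts are preferred: four managers tolerate no more failures than three.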

Volumes are ideal for storing data generated and consumed by Docker containers. They are managed entirely by Docker, which makes them independent of the host’s directory structure and operating system. Volumes offer many advantages, such as easier backup and migration to other hosts, support for both Linux and Windows containers, safe sharing between containers, and the use of volume drivers. Their most significant advantage, however, is that their content does not depend on the state of any container; in other words, a volume’s contents exist outside the lifecycle of a given container.
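The lifecycle difference can be shown with a hypothetical `Engine` model in which volumes are tracked separately from containers, so removing a container leaves its volume’s data in place for the next container that mounts it. This is an illustration of the concept, not Docker’s data model.

```python
class Engine:
    """Hypothetical engine state: volumes live outside container records."""

    def __init__(self) -> None:
        self.volumes: dict[str, dict[str, bytes]] = {}  # volume name -> files
        self.containers: dict[str, str] = {}            # container name -> mounted volume

    def run(self, name: str, volume: str) -> dict[str, bytes]:
        # Create the volume on first use; reuse it if it already exists.
        self.containers[name] = volume
        return self.volumes.setdefault(volume, {})

    def rm(self, name: str) -> None:
        # Removing a container deletes only the container record.
        del self.containers[name]


engine = Engine()
data = engine.run("db-1", volume="pgdata")
data["PG_VERSION"] = b"16"
engine.rm("db-1")                             # the container is gone...
data2 = engine.run("db-2", volume="pgdata")   # ...but its replacement sees the data
```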

Connectors view

The daemon acts as the server in the client-server model and exposes a REST API[8]. It is used mainly by the Docker CLI, a command-line utility that lets users communicate with the daemon easily. The API allows other systems to integrate with the daemon as well, including various monitoring utilities[9] and graphical management interfaces[10], as well as container orchestration systems like Kubernetes or Docker Swarm.
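As a sketch of how a client other than the CLI can talk to the daemon, the snippet below sends an HTTP GET over the daemon’s Unix socket using only the Python standard library. It assumes the default socket path `/var/run/docker.sock` and permission to open it; `UnixHTTPConnection` and `engine_get` are names introduced here, while `/version` and `/containers/json` are real Engine API endpoints.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """GET an Engine API endpoint and decode its JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"{path}: HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host with a running daemon, `engine_get("/version")["ApiVersion"]` reports the API version, and `engine_get("/containers/json")` lists running containers, the same information `docker ps` displays.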

This aspect of the architecture played a huge role in Docker’s popularity, since Linux containers are far more widespread than Windows ones, and macOS offers no native containerization support at all. Docker Desktop[11] was created for Windows and Mac users: it runs the daemon in a dedicated virtual machine, routes network traffic to containers that publish ports, and mounts directories from the host operating system into the containers on the VM. It brought the benefits of Linux containers to these machines and provided a seamless user experience for the vast majority of use cases, especially development ones.

Development view

Since most of the Moby project’s building blocks are written in Go, their repositories follow the typical structure of Go programs. However, unlike most Go programs, Moby does not follow semantic versioning[12] and instead opted for calendar versioning[13]. This causes problems when handling the project’s dependencies with the standard Go modules toolset (such as go mod vendor), because Go modules expect semantic version tags and require a module at major version 2 or higher to carry a /vN suffix in its import path. Instead, Moby developers renamed the standard go.mod and go.sum files to vendor.mod and vendor.sum and provide a dedicated shell script that manages the vendor directory of dependencies in a similar fashion to the standard toolset.
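The versioning clash can be sketched as follows. The hypothetical `required_module_suffix` function computes the import-path suffix that Go’s semantic import versioning would demand for a release tag; it deliberately ignores pre-release tags and other edge cases.

```python
import re

# Simplified: only plain vMAJOR.MINOR.PATCH tags are accepted.
SEMVER_TAG = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")


def required_module_suffix(tag: str) -> str:
    """Import-path suffix Go modules require for a release tag (simplified).

    Modules at major version 2 or higher must end their module path in /vN;
    v0 and v1 need no suffix.
    """
    m = SEMVER_TAG.match(tag)
    if not m:
        raise ValueError(f"{tag!r} is not a vMAJOR.MINOR.PATCH tag")
    major = int(m.group(1))
    return f"/v{major}" if major >= 2 else ""
```

Under this rule, a calendar-based release tagged `v20.10.0` is read as major version 20, so the module path would need a `/v20` suffix that changes whenever the year-based major component does, which is impractical for a project whose import paths are used by many downstream consumers.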

Many Moby projects provide Dockerfiles that declare images with all the tools required for development. Not only is this approach an example of “dogfooding”[14], but it is also particularly beneficial when developing the Docker daemon: it allows running the daemon as root in an isolated environment, without risking damage to the host system’s files. What’s more, the containerized daemon uses its own state directory and does not interfere with the host’s Docker state directory.

Run time view

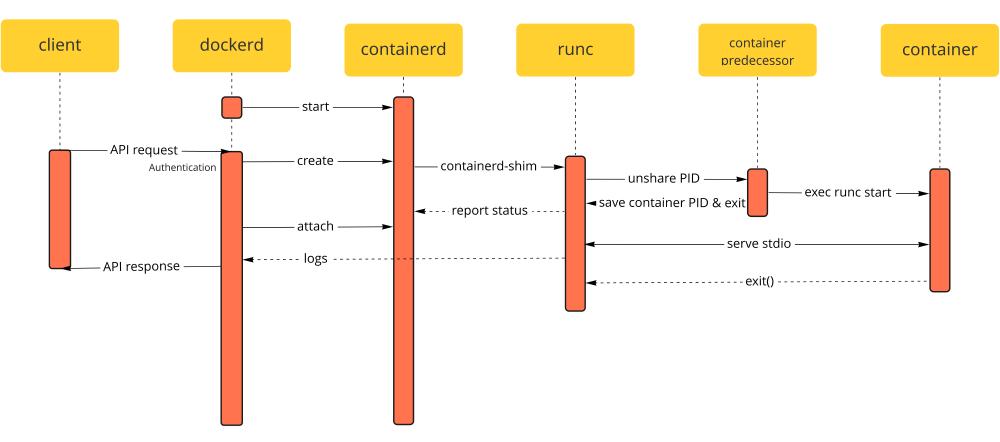

Figure: Sequence diagram of communication between the components during container creation

The daemon can be called through the Docker CLI to build the image described in a Dockerfile. The base image is pulled from the image registry, and the layers are applied to create the desired image. This image can then be pushed to a remote registry or used to create a container. The image layers are combined through union mounting (for example, with OverlayFS[15]) to recreate the filesystem. A new writable layer is then added on top of the existing ones to hold any changes the container processes make to the original image’s filesystem. This approach allows Docker to start many containers from the same image without the overhead of creating multiple copies of the original files.

When the container is started, the entry point process specified in the Dockerfile is executed. The aforementioned process isolation capabilities of the operating system ensure that the process cannot access most of the host system’s resources, even if it runs as the root user inside the container. However, the container can be granted access to specific host directories through bind mounts, or given persistent storage through volumes. Both allow the data created by the isolated process to persist even after the container is recreated.
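The copy-on-write behavior of the writable container layer can be sketched with a toy union mount: reads fall through the read-only image layers from the top down, while writes, appends, and deletions touch only the container’s private upper layer. `OverlayFS` here is a hypothetical in-memory model, not the kernel driver.

```python
from typing import Optional


class OverlayFS:
    """Toy union mount: shared read-only image layers below one writable layer."""

    def __init__(self, lower: list[dict[str, bytes]]) -> None:
        self.lower = lower                           # image layers, oldest first; never modified
        self.upper: dict[str, Optional[bytes]] = {}  # private to this container; None = deleted

    def read(self, path: str) -> bytes:
        if path in self.upper:
            content = self.upper[path]
            if content is None:
                raise FileNotFoundError(path)
            return content
        for layer in reversed(self.lower):  # the topmost image layer wins
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, content: bytes) -> None:
        self.upper[path] = content  # lower layers stay untouched

    def append(self, path: str, data: bytes) -> None:
        # Copy-up: the first modification copies the file into the upper layer.
        self.upper[path] = self.read(path) + data

    def delete(self, path: str) -> None:
        self.read(path)          # raises FileNotFoundError if the file is absent
        self.upper[path] = None  # hide the file from this container only


image = [
    {"/etc/hosts": b"127.0.0.1 localhost\n", "/app/main.py": b"v1"},
    {"/app/main.py": b"v2"},
]
a, b = OverlayFS(image), OverlayFS(image)  # two containers from the same image
a.append("/etc/hosts", b"10.0.0.5 db\n")
a.delete("/app/main.py")
```

Container `a` sees its own modified `/etc/hosts` and no `/app/main.py`, while container `b` and the image layers themselves are unchanged; no files were duplicated until `a` actually modified one.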

We can use the Docker CLI to see which containers are currently running (docker ps) and to interact with them further, for example to view their standard output logs (docker logs), monitor their resource usage (docker stats), or stop them (docker stop).

Realization of key quality attributes

Our previous essay analyzed Moby’s principles: modularity, batteries included but swappable, usable security, and developer focus. From these, we identified the following key quality attributes: modularity, composability, ease of integration, usability, security, and reusability.

The system’s modularity is ensured in multiple ways. The first is code separation: our project focuses on the daemon, which resides in a repository[16] separate from those of the CLI and containerd.

Containerd used to be part of the daemon itself. However, with the creation of the Open Container Initiative[17] (OCI), container runtime and image formats were standardized, and containerd was extracted into a separate project under the supervision of the Cloud Native Computing Foundation (CNCF)[18][19]. This allowed for further modularity. Although Moby and Docker still use containerd by default, it is possible to use other OCI-compatible runtimes as well. What’s more, it allowed other projects like Kubernetes to integrate with containerd directly and simplify their architecture.

Security rests primarily on process isolation, the core of containerization, which prevents the containerized process from accessing the process tree, mount points, and devices of the host operating system. Therefore, an adversary who gains access to a container does not immediately gain access to the host system.

Because the daemon has a REST API, different client applications can be built on top of it. Docker CLI and Docker Desktop make the project usable for different target groups. Maintainers who want to script automatic deployments or run Docker on remote machines over SSH can use CLI commands to deploy containers. Users who are uncomfortable with the console or who use an operating system other than Linux can use Docker Desktop instead. Similarly, any group with its own use case can integrate its solution with the daemon through the API.

During Docker’s development, several components were separated from it to allow standalone usage, containerd being the most prominent example. However, there are more components like that under the Moby project umbrella. Examples include the libnetwork Go library[20], which models networks between containers, as well as individual modules in the main Moby repository. These are responsible for tasks like image building[21], integration with the container runtime[22], and communication with image registries[23], and can be reused in other projects as libraries.

API design principles

The API of the Docker daemon[24] is defined using the OpenAPI standard (formerly known as Swagger). This definition serves as a template from which various tools can deterministically generate API calls and code representations of the payloads in different programming languages. Combined with statically typed languages like Go, it ensures that most breaking changes are caught during compilation.
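To illustrate what schema-derived types give a client, the class below is a hand-written stand-in for code an OpenAPI generator might produce for a subset of the `GET /containers/json` response. The field names `Id`, `Names`, `Image`, and `State` come from the Engine API and keep its capitalization; the validation logic is an assumption, and because Python checks it at run time rather than at compile time, it only approximates what a Go client gets from the compiler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerSummary:
    """Subset of the fields returned for each container by GET /containers/json."""

    Id: str
    Names: list[str]
    Image: str
    State: str

    @classmethod
    def from_json(cls, obj: dict) -> "ContainerSummary":
        # Fail loudly if a field the client relies on is missing or mistyped,
        # the run-time analogue of a compile error in generated Go code.
        expected = {"Id": str, "Names": list, "Image": str, "State": str}
        for name, typ in expected.items():
            if name not in obj:
                raise KeyError(f"missing field {name!r}")
            if not isinstance(obj[name], typ):
                raise TypeError(f"field {name!r} should be {typ.__name__}")
        return cls(**{name: obj[name] for name in expected})
```

Fields the class does not declare, such as `Status`, are ignored, so additive API changes do not break the client, while a removed or retyped field fails immediately instead of surfacing later as a subtle bug.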

What’s more, the OpenAPI definition makes it easy to generate API documentation websites and test suites. To ensure API stability, the daemon’s integration tests are executed directly against the API, not only through the Docker CLI.

- https://docs.docker.com/get-started/overview/#docker-architecture
- https://docs.docker.com/engine/swarm/how-swarm-mode-works/nodes/
- https://docs.docker.com/storage/storagedriver/overlayfs-driver/
- https://www.docker.com/docker-news-and-press/docker-extracts-and-donates-containerd-its-core-container-runtime-accelerate