Essay 3: Quality and Evolution

If you have any experience with a container orchestration tool, you know it can be challenging at first, whether you are running a daemon or configuring Containerfiles, but the benefits outweigh the development costs. In the previous essays, we gave a contextual overview and architectural analysis of the Podman project. In this essay, we examine how well the system satisfies its key quality attributes and how these are safeguarded over time.

As depicted in the figure, we previously identified compatibility, modularity, and security as the key quality attributes. We discussed how the architectural design choices of Podman impact these attributes, and we now analyse how and to what extent Podman implements them.

Figure: Podman’s key quality attributes.

Podman’s Key Qualities

Although Podman’s functionality differs from Docker’s in places, such as Docker Swarm [1] versus Podman pods, it strives to be fully compatible with Docker so that migrating between the two is easy. However, it has no direct, automated compatibility check to ensure this. We therefore identify which other methods Podman uses to safeguard this quality.

Previously, we identified that the architecture was built with modularity in mind. But does this hold at the code level? Modularity in applications can be quantified by analysing source code for refactorability [2]. We perform static analyses that evaluate bugs, complexity, and overall technical debt, from which we infer the level of modularity of the system.

Podman offers improved security by avoiding a daemon and the attack surface that comes with it. The application as a whole must be secure; otherwise, this benefit is lost. Security has no concrete metric that can be tested automatically, at least not for the main security benefit that Podman adds [3]. However, a SonarQube analysis showed no potential security threats [4]. Furthermore, we identify the other means Podman uses to ensure a secure application.

Managing Podman’s Quality

With tens of releases, hundreds of contributors, and thousands of pull requests, the Podman team has engineered a series of processes to ensure that the quality of the product matches its ambitions. This section provides an overview of the quality assurance practices of Podman and how they influence the final product. We consider the quality practices that open-source developers adhere to when contributing to Podman. Further sections dive deeper into individual processes, such as the CI pipeline and the state of the tests.

Podman aims to build a product that is not only appealing to new contributors but also technically sound and sustainable. To achieve this, the developers have established clear contribution protocols. These include templates for contributions and pull requests, as well as information on the critical testing infrastructure and how to write appropriate tests. With access to this information, any willing developer can contribute to Podman while following the established quality standards. These standards include testing and documenting every newly added feature in a consistent fashion.

Prow Robot (k8s-ci-robot) is a Kubernetes-based CI/CD system that Podman uses to monitor and coordinate the code review process through GitHub actions. It performs jobs such as test execution, PR labelling, and merging. The manual review process is outlined in a long checklist to ensure that each merged PR is correct [5].

However, too much rigour in the contribution process can cause rigidity and stifle the creativity of developers [6]. To mitigate this, the team introduced flexible alternatives at several points in the process. For instance, developers can disable the requirement for adding tests by using the [NO TESTS NEEDED] tag.
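The following minimal Python sketch illustrates how such an escape hatch could work inside an automated check. It is not Podman's actual CI script: the function names, the rule for what counts as a test file, and the choice to look for the tag in the PR description are all assumptions made for illustration.

```python
from typing import List

NO_TESTS_TAG = "[NO TESTS NEEDED]"


def is_test_file(path: str) -> bool:
    # Assumed rule: Go test files, or anything under a top-level test/ directory.
    return path.endswith("_test.go") or path.startswith("test/")


def passes_test_gate(pr_description: str, changed_files: List[str]) -> bool:
    """Return True when the PR satisfies the (hypothetical) test requirement."""
    # The tag explicitly waives the requirement.
    if NO_TESTS_TAG in pr_description:
        return True
    touches_code = any(
        p.endswith(".go") and not is_test_file(p) for p in changed_files
    )
    touches_tests = any(is_test_file(p) for p in changed_files)
    # Code changes need accompanying tests; non-code changes pass as they are.
    return touches_tests or not touches_code
```

With this rule, a PR that only changes `libpod/pod.go` is rejected unless it also touches a test file or carries the tag, while a documentation-only PR passes without either.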

CI process and Testing

Building a reliable CI process and test automation system for a complex project like Podman is challenging. In the early stages of Podman (the libpod project), contributors considered several reasonable CI solutions, including Travis, PAPR, and OpenShift. Eventually, Podman chose Cirrus CI for its speed, reliability, and flexibility. Podman takes advantage of this system in three ways [7]:

- Easy image orchestration for executing tests in different environments.

- Powerful and simple manipulation of image running configurations.

- Clear visualisation of the result of CI tasks.

The development community benefits from this system, especially from its visualisation features. Developers can see the CI task results grouped by script blocks and have a status graph that continuously publishes the main branch’s health condition. The core contributors use it to track down problems and closely follow the development community.

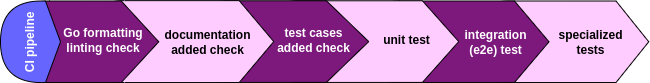

The CI process built on Cirrus CI comprises 57 different tasks, ranging from setting up check automation and building Podman to executing tests. These tasks can be categorised into six key parts, shown in the figure below.

Figure: Podman’s CI pipeline for automatic quality control.

After Podman has been built successfully in multiple configurations, all unit tests are executed in parallel in different environments (Fedora 33 and 34, Ubuntu 20.04 and 20.10), followed by the API tests. The integration and system tests are run in both root and rootless environments. Integration tests account for most of the time spent in the pipeline. Finally, the upgrade tests check stability when upgrading from three milestone Podman versions (1.9.0, 2.0.6, and 2.1.1). All tests must succeed for the pipeline to pass.
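The way these stages fan out into individual jobs can be sketched in Python as follows. This is only an illustration built from the environments and versions named above; the job names are hypothetical, the real pipeline has 57 tasks defined in Cirrus CI configuration, and we assume here that the root and rootless suites are not additionally multiplied by distribution.

```python
from itertools import product
from typing import Dict, List

DISTROS = ["fedora-33", "fedora-34", "ubuntu-20.04", "ubuntu-20.10"]
PRIVILEGES = ["root", "rootless"]
UPGRADE_FROM = ["1.9.0", "2.0.6", "2.1.1"]


def expand_jobs() -> List[str]:
    """Expand the test stages into a flat list of (hypothetical) job names."""
    jobs = [f"unit/{distro}" for distro in DISTROS]
    jobs.append("api")
    jobs += [
        f"{suite}/{priv}"
        for suite, priv in product(["integration", "system"], PRIVILEGES)
    ]
    jobs += [f"upgrade/from-{version}" for version in UPGRADE_FROM]
    return jobs


def pipeline_passes(results: Dict[str, bool]) -> bool:
    """The pipeline passes only if every expanded job ran and succeeded."""
    return all(results.get(job, False) for job in expand_jobs())
```

A single failing or missing job makes `pipeline_passes` return `False`, which mirrors the rule that all tests must succeed.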

We found that the total unit test coverage is only 12.5%. Some critical libpod components, such as container.go, container_internal.go, and pod.go, are barely covered by unit tests (6.9%, 1.7%, and 1.2% respectively). However, the integration and system tests check most of the key functionality.
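To make clear what a total coverage figure expresses, the sketch below computes line-weighted coverage in Python. The input numbers in the usage note are invented for illustration and are not Podman measurements; the function name is likewise hypothetical.

```python
from typing import List, Tuple


def overall_coverage(per_file: List[Tuple[int, int]]) -> float:
    """Line-weighted coverage in percent.

    per_file holds (covered_lines, total_lines) for each source file.
    """
    covered = sum(c for c, _ in per_file)
    total = sum(t for _, t in per_file)
    return 100.0 * covered / total if total else 0.0
```

For example, a small file with 90 of 100 lines covered and a large file with 10 of 900 lines covered give `overall_coverage([(90, 100), (10, 900)])`, which is 10.0, even though the average of the two per-file percentages is about 45.6. Large, barely covered files therefore dominate the total.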

Neither the Podman CI nor the manual reviews require unit tests for changes to components, but they do enforce integration and system tests. This suggests that the team focuses on the robustness of the functionality as a whole and does not strive for high statement or branch coverage. Possible reasons are the high development cost of unit tests and the finding that, for mature projects, the correlation between test coverage and the number of bugs found through tests is insignificant [8].

Hotspot components

To be a competitive container orchestration tool, Podman needs to maintain the quality of the project rigorously and continuously in the long term. To analyse how future-proof Podman is, we look at the program’s entire history.

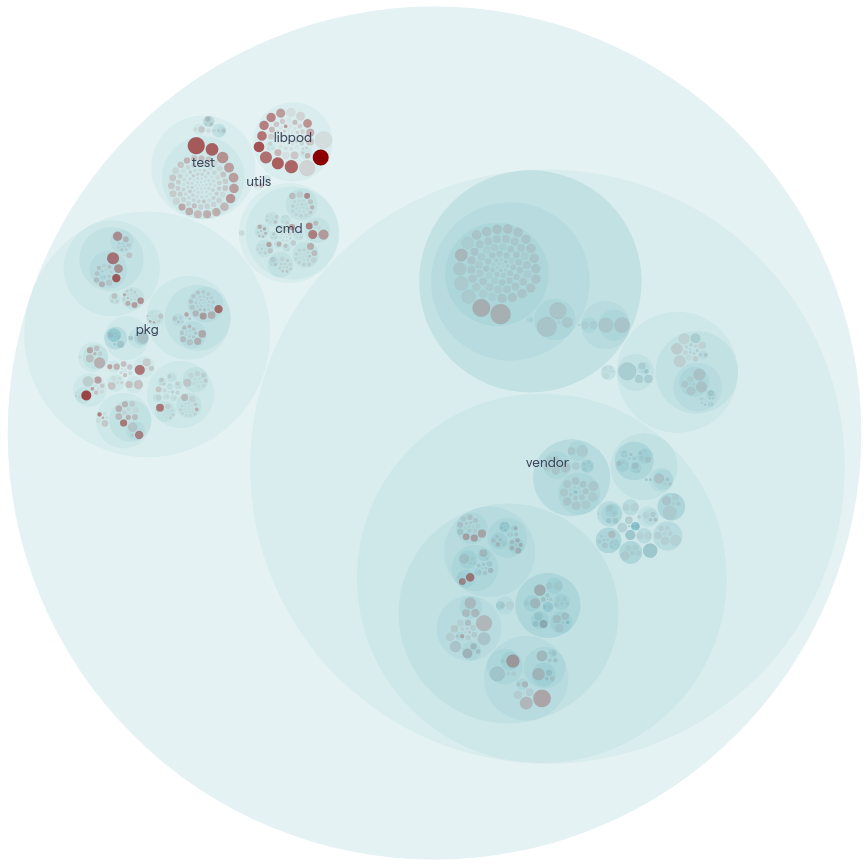

CodeScene is a tool for identifying and prioritising technical debt in a software project. It analyses the code on a social level by looking at the developers’ interactions with the source code [9]. In the figures below, we show the results of this analysis, which highlight the hotspot files in the project. Hotspot files are changed frequently and account for most of the development cost, which makes them preferable targets for refactoring [10].
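The idea behind a hotspot ranking can be sketched in a few lines of Python. This is not CodeScene's algorithm, which is not described here; it is a simplified stand-in that assumes a file's score is the product of its normalised change frequency and its normalised size, with lines of code used as a rough complexity proxy. The function name and the example file names are hypothetical.

```python
from typing import Dict, List, Tuple


def rank_hotspots(files: Dict[str, Tuple[int, int]]) -> List[str]:
    """Rank files by a simple hotspot score, highest first.

    files maps a file name to (revisions, lines_of_code).
    """
    if not files:
        return []
    # Guard against division by zero when all values are 0.
    max_rev = max(rev for rev, _ in files.values()) or 1
    max_loc = max(loc for _, loc in files.values()) or 1
    score = {
        name: (rev / max_rev) * (loc / max_loc)
        for name, (rev, loc) in files.items()
    }
    return sorted(score, key=lambda name: score[name], reverse=True)
```

With made-up inputs such as `{"a.go": (120, 2000), "b.go": (10, 3000), "c.go": (150, 100)}`, the large and frequently changed `a.go` ranks first, ahead of the large but stable `b.go` and the small but busy `c.go`.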

The overview figure makes clear that, although vendor dependencies make up a large part of the project, they have only a small impact compared to libpod, the main library of Podman. Lowering CodeScene’s code health filter, as displayed in the second figure, confirms this.

Figure: Hotspot analysis overview.

Figure: Podman’s main hotspot files.

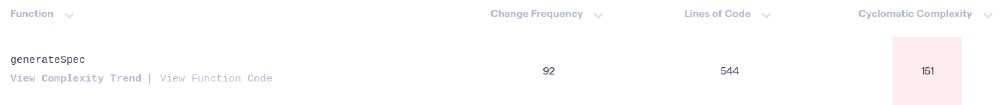

Zooming in on the main hotspots, we find, for instance, container_internal_linux.go. This component has large functions with high cyclomatic complexity. It is frequently changed and heavily coupled with other files, which makes the cost of improving the health of the code high, as shown in an example in the figure below.

Figure: Example of hotspot function with high change frequency and cyclomatic complexity.
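As a rough illustration of what cyclomatic complexity measures, the Python sketch below counts decision points in the text of a Go function and adds one. It is a crude approximation under stated assumptions: it scans for the keywords `if`, `for`, and `case` and the operators `&&` and `||`, it does not parse Go, and it will miscount keywords that appear inside comments or string literals. It is not the metric implementation used by CodeScene.

```python
import re

# Tokens that each open an additional path through the function.
_DECISION = re.compile(r"\b(?:if|for|case)\b|&&|\|\|")


def cyclomatic_complexity(go_function_source: str) -> int:
    """Approximate McCabe complexity: number of decision points plus one."""
    return 1 + len(_DECISION.findall(go_function_source))
```

A function containing one `if` with a single `&&` condition and one `for` loop scores 4 under this approximation, while a straight-line function scores 1.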

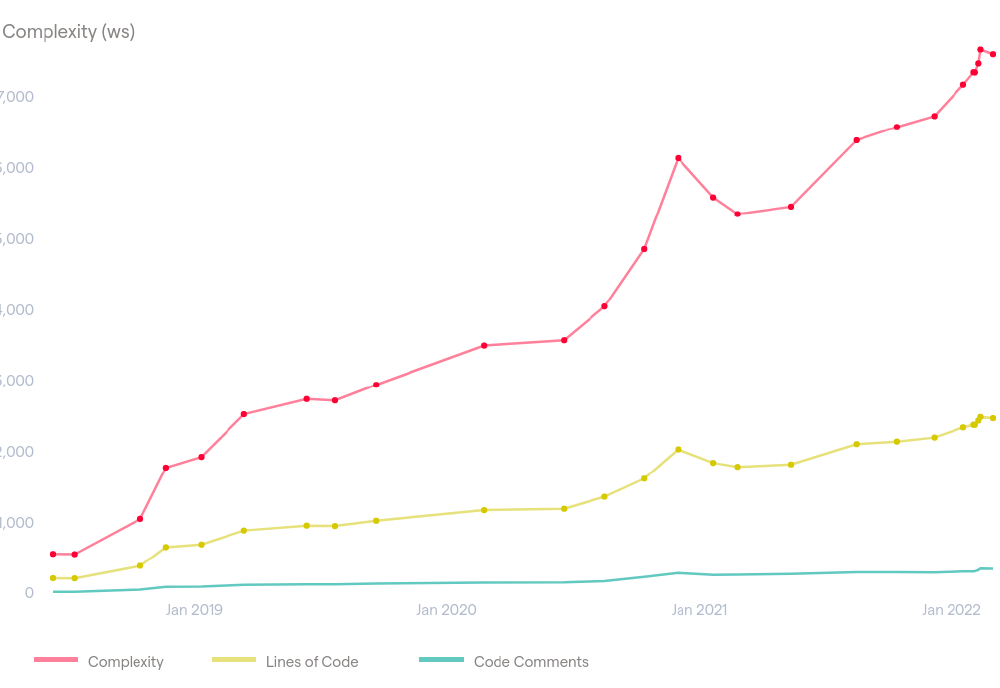

The complexity of important files should be kept under control, yet the complexity trend of this file is rising, as displayed in the figure, which means that its code health is deteriorating [11]. As modularity is a key quality attribute, refactoring large components like this one should have high priority.

Figure: container_internal_linux.go complexity trend.

Podman could benefit from introducing some of these analyses to automatically identify modules that need improvement. These analyses could drive improvements by checking static code quality empirically.

The Quality Culture

To assess the quality culture behind Podman, we analysed over 30 architecturally significant pull requests and issues by studying their comments, interactions, reviews, and evolution. We highlight a set of interesting aspects and extract the recurring quality-related patterns.

The most crucial aspect of the PR review process is its thoroughness. Every line of an added feature is reviewed by one or more of the lead developers at Red Hat. The project owners scrutinise everything from test quality to line spacing and grammar, which highlights the attention to detail at Podman.

Another essential attribute of the quality culture is the high level of communication in both issues and PRs. Everything from formal requirements to feature direction and comment formatting is debated at length. Both open-source developers and Red Hat employees participate in these discussions, which can span several months. An excellent example is PR 1385, in which five developers left almost 200 comments over more than two months.

PRs 12429 and 2940 and issues 6480 and 13242 embody the quality culture at Podman. Both PRs focus on improving the key quality attributes of the system and span several months of discussion. The former improves security by limiting the podman image scp command’s access to the user namespace. The latter significantly improves the modularity of Podman by removing the internal firewall network package and relying on the CNI alternative instead [12]. The issues highlight the importance of compatibility for Podman: the former requests expanded compatibility with Kubernetes environments through easier initialisation, while the latter extends Podman’s compatibility with standard Docker commands by providing equivalent behaviour.

The maintainers’ enthusiasm is the best indicator of the importance of quality in the Podman developer culture. In PR 2940, one core maintainer happily concludes:

It’s done. It’s finally done. We don’t have to maintain that code anymore. Immense thanks for @giuseppe for handling this

It is also notable that the developers track flaky issues and discuss them actively. These issues are hard to fix because they are difficult to reproduce. Podman is prone to them because it is highly configurable and runs on different flavours of Unix operating systems.

Technical Debt

Any large project is bound to have some technical debt, and Podman is no exception. Podman contains several large files with too many responsibilities, which increases code debt. This can impose a sizeable cognitive load on contributors and make the code challenging to maintain in the future. Additionally, developers who want to change these files must adapt the many coupled components that rely on them. This is called divergent change, where refactoring one function means that many other functions have to be changed as well [13]. Furthermore, the libpod directory contains 72 files, which makes it difficult to find specific files and navigate between them. One way to mitigate this is to restructure the directories according to functionality. However, this requires many changes to the import structure of the project, which leads to high development costs.

Apart from code debt, the project also has considerable automated test debt. We analysed the coverage of 137 files, excluding dependencies, and found that only 7.89% of the lines are covered overall, which means that much of the code is not unit tested. Podman relies primarily on end-to-end (E2E) tests, which leaves room for minor errors to go uncaught. This can cause problems down the line, when contributors must shift resources to fix newly discovered bugs that unit tests could have caught earlier.

Another module with technical debt is the Podman API, which carries structural debt in its source code. This makes it difficult to propagate changes in CLI functionality to the API, so the two become disconnected. The Podman API documentation [14] shows that the descriptions of the endpoints are minimal and lack good examples of usage and failures, which makes the API difficult for newcomers to understand.

Conclusion

We have analysed the quality of Podman source code and its processes and related their design choices to the key quality attributes that we identified. Overall, we find that the core contributors of Podman are highly invested in maintaining the quality of the product via a high-level CI and testing infrastructure combined with rigorous manual review processes.

There is room for improvement in the development costs caused by technical debt. The low test coverage and the heavy reliance on manual quality control raise the question of how these practices scale over time. We examine these aspects in the final essay.

References

1. Docker Swarm. (2021). Retrieved March 14, 2022, from https://docs.docker.com/engine/swarm/

2. K. Hölttä-Otto, N. A. Chiriac, D. Lysy, E. S. Suh. (2012). Comparative analysis of coupling modularity metrics. Journal of Engineering Design, vol. 23, no. 10-11, pp. 790-806. Taylor & Francis.

3. Y. Cheng, J. Deng, J. Li, S. DeLoach, A. Singhal, X. Ou. (2014). Metrics of Security. In Cyber Defense and Situational Awareness, pp. 263-295. Springer, Cham.

4. SonarQube getting started. (2021). Retrieved March 14, 2022, from https://docs.sonarqube.org/latest/setup/get-started-2-minutes/

5. Kubernetes Code Review Process and testing and merge workflow. (2022). Retrieved March 16, 2022, from https://github.com/kubernetes/community/blob/master/contributors/guide/owners.md#the-code-review-process/ and https://github.com/kubernetes/community/blob/master/contributors/guide/pull-requests.md#the-testing-and-merge-workflow/

6. Sommerville, I. (2011). “Chapter 24: Quality Management”. Software Engineering (9th ed.). Addison-Wesley. pp. 651–680. ISBN 9780137035151.

7. Chris Evich. (March 18, 2019). CI, and CI, and CI, oh my! (then more CI). Retrieved March 18, 2022, from https://podman.io/blogs/2019/03/18/CI3.html

8. P. S. Kochhar, D. Lo, J. Lawall and N. Nagappan, “Code Coverage and Postrelease Defects: A Large-Scale Study on Open Source Projects,” in IEEE Transactions on Reliability, vol. 66, no. 4, pp. 1213-1228, Dec. 2017, doi: 10.1109/TR.2017.2727062.

9. CodeScene getting started. (2021). Retrieved March 14, 2022, from https://codescene.io/docs/getting-started/index.html

10. CodeScene Hotspots. (2021). Retrieved March 14, 2022, from https://codescene.io/docs/guides/technical/hotspots.html

11. CodeScene Complexity Trends. (2021). Retrieved March 14, 2022, from https://codescene.io/docs/guides/technical/complexity-trends.html

12. The Container Network Interface. (2022). CNI Firewall Plugin. Retrieved March 18, 2022, from https://www.cni.dev/plugins/current/meta/firewall/

13. Shvets, A. (n.d.). Divergent change. Refactoring.Guru. Retrieved March 15, 2022, from https://refactoring.guru/smells/divergent-change

14. Podman Community. (n.d.). Provides an API for the Libpod library (4.0.0). (2021). Retrieved March 10, 2022, from https://docs.podman.io/en/latest/_static/api.html