Scalability is a crucial factor for any software solution today. When scalability is discussed, it usually means that the software should respond as well for 100 or 10,000 users as it does for a single user. Be it a streaming service or an e-commerce website, the ability to cater to a varying number of users directly correlates with the platform’s key performance metrics. In terms of workload and resource utilization, Robot Framework (RF), unlike cloud-based testing software, does not fit this quintessential meaning of scalability. As a test automation tool, it is designed to be installed locally on the user’s system.

However, RF does fit another aspect of scalability: the capability to support elaborate test scenarios for software that has numerous features and caters to huge user bases. This essay discusses the impact on resource utilization of running such tests through RF on a user’s system. In addition to these performance metrics, the essay discusses feature scalability and scalability of availability. We also explore certain architectural decisions that were taken to optimize performance.

Key challenges

Setting up multiple testbeds is a challenge because it requires heavy installation work. When testing a web application, as is often the case, different testbeds require different driver versions corresponding to the browser version [1]. Managing all these dependencies becomes an overhead for QA personnel. A customizable installation file that takes care of all dependencies out of the box would greatly alleviate the problem.

Another key issue (issue #4110) is the lack of built-in support for running tests in parallel. Parallel execution is essential to reduce the execution time of test scenarios with elaborate steps, so a parallel testing capability would greatly reduce the number of hours a big test cycle takes. RF does not natively support parallel runs of test cases; the lead developer justifies this by saying that a proper implementation is rather complicated. Issues related to the lack of parallelism (issue #1791) have been open for several years now.

Scalability and Performance Evolution

RF is an offline software tool. Its performance and scalability are determined by how users construct and execute their test suites, and by the dependencies needed to do so. However, the rebot container has gone through several iterations of development since the software’s inception in order to improve Robot Framework’s scalability and performance. Because it is in charge of rendering logs and reports as locally hosted web pages, it can consume considerably more memory and processing power than other modules in RF.

Back in 2009, before the release of RF version 2.6, issue #336 was filed to suggest an upgrade to the rebot tool: a customizable argument regulating the degree of detail in the final test reports. Another issue, #849, was dedicated to sorting test reports based on tags. Both issues were created to improve the tool’s scalability, and both were handled with the release of version 2.6 alpha 1.

Following that, in an effort to reduce overall test execution time, issues #1791 and #1792 discussed how to process user keywords in parallel on different threads. This functionality had been intentionally removed through issue #490 in release RF 2.6, because keyword libraries were not thread safe. Given the effort necessary, these issues are still open as low-priority enhancements.

Apart from these issues, users began to notice a significant increase in memory usage in versions after RF 3.2.2. The performance lag was attributed to the rebot tool. The problem and its remedy are outlined in issue #4114; it was investigated using several profiling tools and addressed through multiple commits for release RF 4.1.2. To detect memory utilization bottlenecks, the lead developer used the Linux /usr/bin/time -v utility as well as the Python memory profiler Fil [2]. The primary cause of the problem was found to be Keyword.teardown, introduced in version RF 3.2.2. With the final patch, memory utilization decreased by 33% compared to RF 4.1.1 and by 20% compared to RF 3.2.2.
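The kind of measurement described above can be approximated with Python’s standard library. The following is a minimal sketch, not the procedure used in issue #4114: it swaps in `tracemalloc` for Fil and /usr/bin/time -v, and `build_fake_result_model` is a hypothetical stand-in for a large in-memory result tree.

```python
import tracemalloc


def peak_memory_bytes(func, *args, **kwargs):
    """Run func and return (result, peak bytes allocated by Python while it ran)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def build_fake_result_model(n):
    # Hypothetical workload: one small dict per keyword, each with an
    # extra attribute, to mimic a per-object memory cost.
    return [{"name": f"kw{i}", "teardown": None} for i in range(n)]


_, small_peak = peak_memory_bytes(build_fake_result_model, 1_000)
_, large_peak = peak_memory_bytes(build_fake_result_model, 100_000)
print(f"peak for 1k items: {small_peak} B, peak for 100k items: {large_peak} B")
```

Comparing the peak before and after a change to the per-object data gives a rough indication of whether a new attribute is responsible for a memory increase.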

Recently, a contributor proposed minor performance and speed enhancements in ‘utils’ for RF 5.0 via pull request #4256. These adjustments were also aimed at improving the rebot tool’s performance. Flame graph analysis [3] showed that the timestamp to secs routine takes up 25.46 percent of rebot execution time, while write over threshold in robot/reporting/jswriter:87 takes up 39.94 percent. With these bottlenecks identified, viable enhancements to speed up those two methods were found, and a patch has been committed for the recently released RF 5.0 milestone.
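A flame graph is one way of finding which routines dominate the run time; a flat profile gives a similar, if coarser, answer. The sketch below uses the standard `cProfile` and `pstats` modules rather than a flame graph tool, and the functions `parse_timestamp`, `write_output`, and `workload` are hypothetical examples, not RF’s actual routines.

```python
import cProfile
import pstats


def own_time_shares(func, *args):
    """Profile func and return {function name: share of total own time}."""
    profiler = cProfile.Profile()
    profiler.runcall(func, *args)
    # stats maps (file, line, name) -> (cc, nc, tottime, cumtime, callers)
    stats = pstats.Stats(profiler).stats
    total = sum(entry[2] for entry in stats.values()) or 1.0
    shares = {}
    for (_, _, name), entry in stats.items():
        shares[name] = shares.get(name, 0.0) + entry[2] / total
    return shares


def parse_timestamp(text):
    # Hypothetical parser for "HH:MM:SS" strings.
    hours, minutes, seconds = text.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds)


def write_output(values):
    # Hypothetical serialization step.
    return ",".join(str(v) for v in values)


def workload():
    values = [parse_timestamp("12:34:56") for _ in range(50_000)]
    return write_output(values)


shares = own_time_shares(workload)
top = sorted(shares.items(), key=lambda kv: kv[1], reverse=True)[:5]
for name, share in top:
    print(f"{name:40s} {share:6.1%}")
```

The functions with the largest shares are the first candidates for optimization, which is the same reasoning applied to the two rebot bottlenecks above.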

Figure: Flame Graph Analysis

Performance Analysis

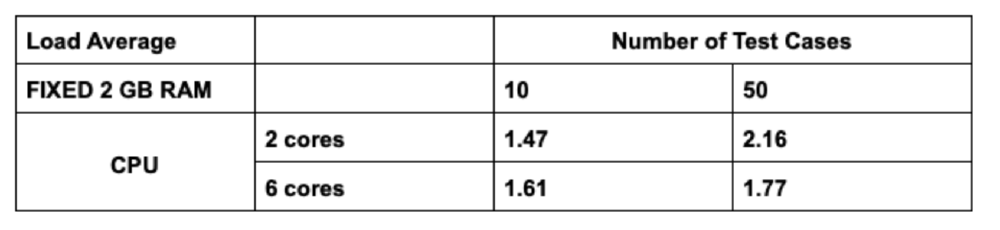

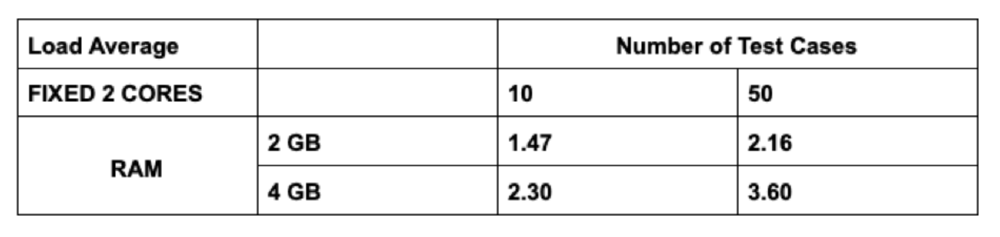

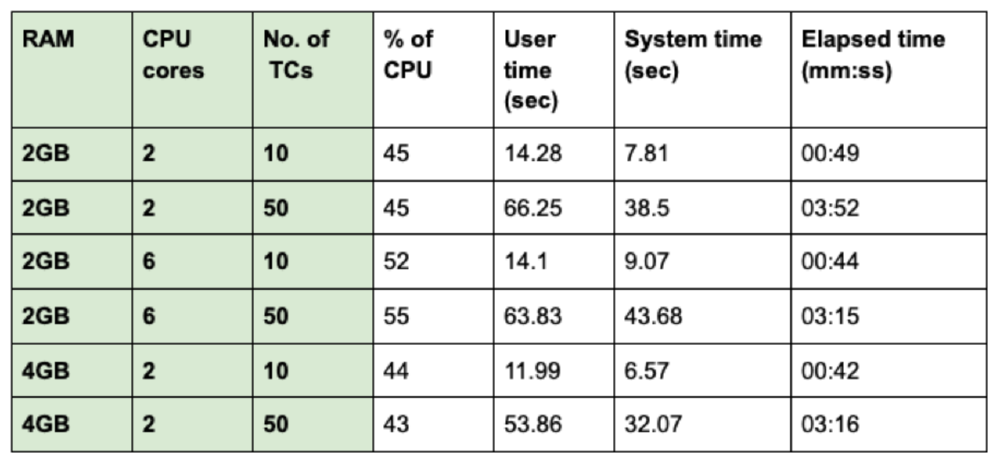

For our analysis, we tested RF with various core and RAM configurations on an Ubuntu virtual machine. We used one of RF’s real-world applications: verifying the operation of a website. We automated the login and add-to-cart functionality of saucedemo, a website that is available for testing. We repeated the test case for multiple iterations to simulate multiple test cases in a single run, and evaluated runs of 10 and 50 test cases.

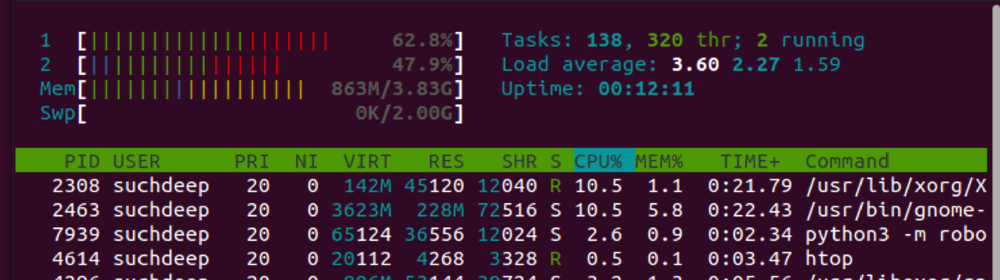

We analyzed performance by first measuring the timing details with /usr/bin/time [4] and then measuring the load parameters with htop [5]. Load is a measure of the amount of computational work that a computer system performs. Note that the load can exceed the total number of cores, meaning that processes have to wait longer for the CPU [6].
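The relationship between load and core count can be made concrete with a small sketch. It reads the same load averages that htop displays through `os.getloadavg()`, which is available on Unix-like systems; the helper names `load_per_core` and `is_oversubscribed` are our own and are only illustrative.

```python
import os


def load_per_core(load_average, cores):
    """Normalize a load average by the number of CPU cores."""
    return load_average / cores


def is_oversubscribed(load_average, cores):
    """True when more work is queued than the cores can run at once."""
    return load_per_core(load_average, cores) > 1.0


if hasattr(os, "getloadavg"):
    try:
        one_min, five_min, fifteen_min = os.getloadavg()
        cores = os.cpu_count() or 1
        print(f"1-minute load {one_min:.2f} on {cores} cores "
              f"({load_per_core(one_min, cores):.2f} per core)")
    except OSError:
        print("load average is not available on this system")
```

A per-core value above 1.0 corresponds to the situation noted above, where processes wait for the CPU.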

Figure: Load analysis of CPU and Number of Test Cases

Figure: Load analysis of RAM and Number of Test Cases

Figure: Timing Analysis

Figure: htop Tool Output Sample

Impact of RAM

With more RAM, CPU load increases (load at 4 GB > load at 2 GB), as there is more data to access. However, with reduced memory access delays, we get a net performance improvement and less elapsed time. We also see that the CPU usage percentage decreases with more RAM, as the system can hold more application data in memory. This reduces the frequency of internal data transfers and new memory allocations, giving the CPU a much-needed break. Another interesting observation was that swap memory was used in low-RAM scenarios, indicating its importance.

Impact of CPU Cores

Upon increasing the number of cores, we see that the net load decreases (this is not apparent for the smaller number of test cases, but it is significant for the larger number). Furthermore, increasing the number of cores reduces elapsed time in all cases, because more instructions can be processed in parallel across multiple cores. In summary, the throughput of RF can be maximized by exploiting multicore capabilities.

Impact of Number of Test cases

We see that the load increases as the number of test cases increases, due to the increased computational need of processing more data. Additionally, increasing either RAM or CPU cores has a positive impact on performance (a decrease in elapsed time) for both test set sizes.

Steps towards supporting scalability

Pabot

While RF has no built-in support for running test cases in parallel, an external tool called pabot [7] provides this functionality out of the box. With pabot, multiple test suites can run in parallel, as opposed to the sequential test runs of robot.
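The idea of suite-level parallelism can be illustrated with a short sketch. This is not pabot’s implementation: `run_suites_in_parallel` and `fake_run_suite` are hypothetical names, and the suite runner only sleeps to simulate work. A real runner would more likely start one robot process per suite.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_suites_in_parallel(suites, run_suite, workers=4):
    """Run each suite through run_suite concurrently; return {suite: result}."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the input order of the suites.
        results = list(pool.map(run_suite, suites))
    return dict(zip(suites, results))


def fake_run_suite(name):
    # Hypothetical stand-in for executing one suite.
    time.sleep(0.05)
    return "PASS"


suites = ["login.robot", "cart.robot", "checkout.robot", "search.robot"]
started = time.perf_counter()
outcome = run_suites_in_parallel(suites, fake_run_suite)
elapsed = time.perf_counter() - started
print(outcome)
print(f"elapsed: {elapsed:.2f} s for {len(suites)} suites")
```

With four workers, the elapsed time is close to that of a single suite rather than the sum of all four, which is the saving that parallel suite execution aims for.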

Feature scalability

This aspect of scalability [8] is defined by how easily software can accommodate new features. If the codebase requires major refactoring for each new feature, the code exhibits low scalability. On the other hand, a no-touch plug-in architecture exhibits high scalability.

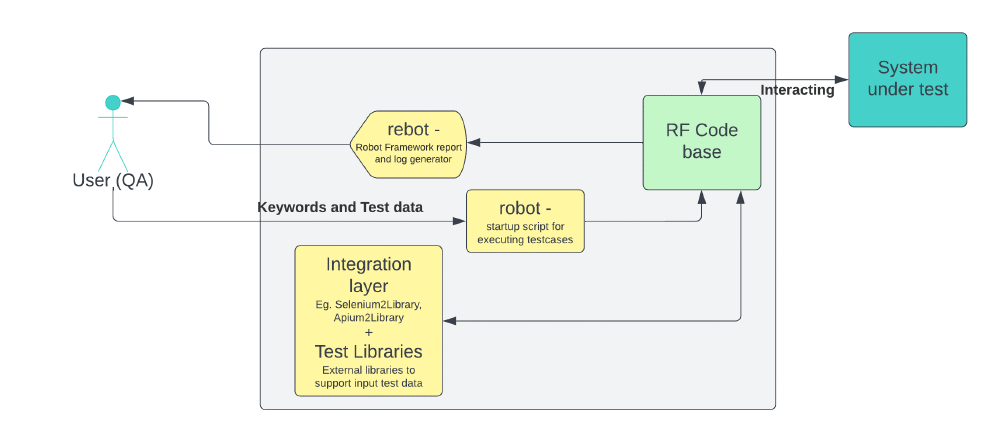

A new feature for a testing framework would be synonymous with the easy integration of a new system under test. As elaborated in previous essays, the modular architecture of RF allows limitless extensibility with minimal code refactoring.

Suggested Solutions

Robot Framework Cluster Architecture

We discussed in previous sections that Robot Framework is a local tool that runs tests sequentially. From a scalability point of view, this limits performance. To explain this, let us take an example. In the figure, the test suite consists of multiple test cases with the dependencies shown. In the current setup, we need to run each of these tests locally on our machine, and sequentially at that. A possible sequence is A -> B -> D -> C -> E. Hence, not only do we have to run these tests on a single machine, we also cannot parallelize them. In an environment where multiple stakeholders are probably running the same tests on different individual machines, the current approach falls short of utilizing this shared capability. Furthermore, it leads to unnecessary overall space requirements, since each user initializes all the library instances for each test or suite locally.
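To show what parallelizing such a suite could look like, the sketch below groups test cases into batches whose members do not depend on each other. The dependency graph is an assumption chosen to be consistent with the sequence A -> B -> D -> C -> E; the actual dependencies are those in the figure. It uses `graphlib.TopologicalSorter` from the Python standard library (3.9 or later).

```python
from graphlib import TopologicalSorter


def parallel_batches(dependencies):
    """Group nodes into batches; every node in a batch can run concurrently.

    dependencies maps each test case to the set of test cases it depends on.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tests whose dependencies are done
        batches.append(ready)
        sorter.done(*ready)
    return batches


# Assumed dependency graph: B and C need A, D needs B, E needs C and D.
example_dependencies = {
    "B": {"A"},
    "C": {"A"},
    "D": {"B"},
    "E": {"C", "D"},
}

batches = parallel_batches(example_dependencies)
print(batches)
```

Under this assumed graph, five sequential steps collapse into four batches because B and C can run side by side; wider graphs would benefit more.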

Figure: Example Test Suite

Hence, we suggest a cluster-based distributed infrastructure for Robot Framework that can leverage computational capabilities across the grid, thus greatly reducing the requirements on individual machines in terms of computation, space, etc. Furthermore, this would improve load balancing (in terms of testing resources) and parallelize the process, which decreases testing time. The drawbacks could be increased network requirements and the need for a better load management structure.

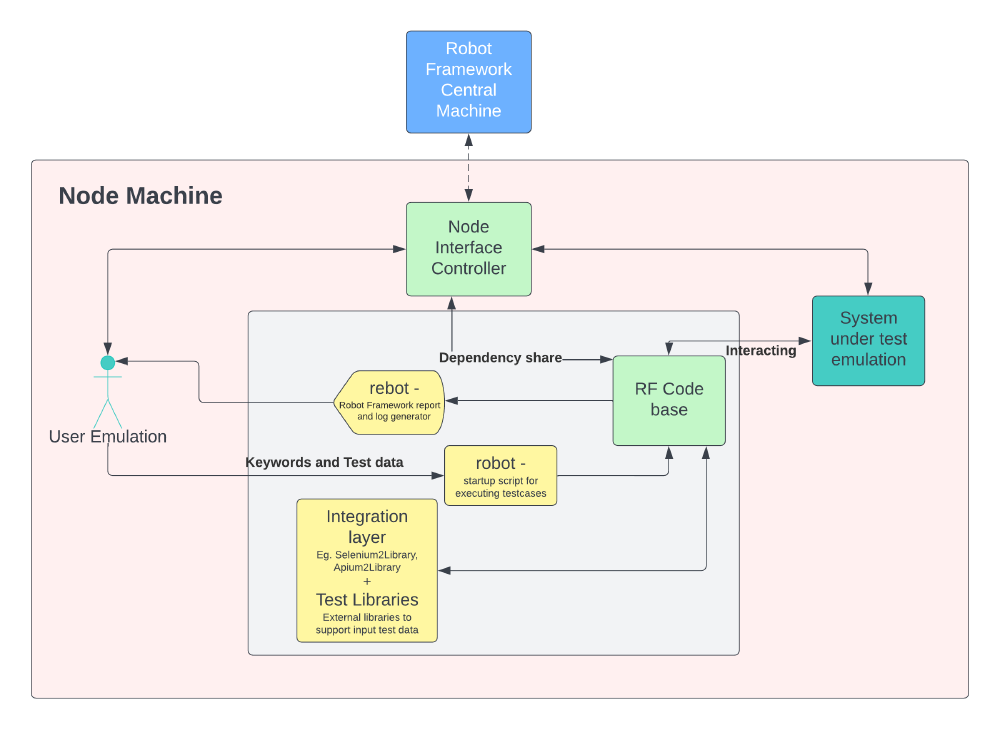

Figure: Robot Framework Cluster Hub

This infrastructure can consist of a centralized command machine that breaks the test suite into its constituent test cases and forwards requests to node machines, which evaluate their respective test cases and return the outputs to the centralized command machine. The central machine can communicate with local node interface controllers to share necessary information. The node controller, in turn, can emulate a sub-environment with selected test cases to run on the local node machine. More about how the internal process works can be found in one of our older essays on architecture.

Figure: RF Existing Architecture

Figure: RF Cluster Node Architecture

RF Dependency Manager

As discussed earlier, setting up the environment and its dependencies is quite a hassle. To make things easier, a customizable installer could be included that takes care of all dependencies out of the box.

Adding performance tests to the quality processes

There have been occasions when users have noticed an increase in memory usage and performance lag following a certain release, as stated above in the Scalability and Performance Evolution section. So, in addition to the architectural changes previously discussed, a change in quality culture could be implemented to ensure that future releases are not prone to memory or performance degradation. Monitoring performance could flag additional bottlenecks. Resolving the said bottlenecks could even end up boosting the performance of future releases. Improving RF’s overall performance is an effort towards enhancing scalability because faster test runs would make executing greater numbers of test scenarios more feasible.

We suggest adding a step to the CI/CD pipeline that runs performance and profiling tests, as mentioned earlier, before every pull request is merged. This step would check whether any change contributes to performance degradation. Additionally, a separate issue for enhancing performance can be added to the milestones for each release. The suggested changes would ensure that each release makes progress toward scalable, high-performing software.
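A minimal sketch of such a check is given below. It assumes that each pipeline run produces a few numeric metrics (the names `elapsed_s` and `peak_mem_mb` and the 10% tolerance are hypothetical) and compares them with a stored baseline. It is not part of RF’s existing tooling.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return {metric: relative increase} for metrics that exceed the tolerance.

    baseline and current map metric names to measurements where lower is better.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        value = current.get(metric)
        if value is None:
            continue  # metric not measured in this run
        if value > base_value * (1 + tolerance):
            regressions[metric] = (value - base_value) / base_value
    return regressions


# Hypothetical measurements from the main branch and from a pull request.
baseline = {"elapsed_s": 10.0, "peak_mem_mb": 200.0}
pull_request = {"elapsed_s": 10.5, "peak_mem_mb": 260.0}

regressions = find_regressions(baseline, pull_request)
for metric, increase in regressions.items():
    print(f"{metric} regressed by {increase:.0%}")
```

A pipeline step could fail the build whenever the returned mapping is non-empty, so that a memory increase like the one described in the Scalability and Performance Evolution section is caught before release.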

References

1. Building Scalable Robot Framework - https://www.copado.com/devops-hub/blog/building-scalable-robot-framework-test-automation-benefits-and-challenges-crt
2. Python Memory profiler - https://pythonspeed.com/fil/
3. Flame Graph Analysis - https://www.brendangregg.com/flamegraphs.html
4. Unix Time command - https://man7.org/linux/man-pages/man1/time.1.html
5. htop command - https://htop.dev
6. Monitoring system resources using htop - https://www.deonsworld.co.za/2012/12/20/understanding-and-using-htop-monitor-system-resources/
7. Pabot - https://pabot.org/
8. Aspects of scalability - https://lilly021.com/the-importance-of-scalability-in-software-development/